Part IIIB/III: ACO for Retailers: The Agentic Commerce Optimization Playbook

Follow our Nine-Step Playbook to Optimize your Product Catalog for Agentic Commerce, Own the Product Cards and Dominate this Holiday's Agentic Sales.

This is Part III of our three-part series on GenAI Engine Optimization for retailers. The series builds linearly and we’ve added a ton of subscribers since we started, so I strongly recommend going back to Parts I and II to get those concepts before we jump into Part III.

Part I - (9/3/25) - What is GEO→ACO for Agentic Commerce and What’s the Goal?

Part II - (9/10/25) - The 13 Agentic Commerce Pitfalls: with real-world examples.

Part IIIA - (10/21/25) Agentic Commerce Optimization Foundations: ACO 9-Step Sequential System, Canonicalization, Own the Product Card, Content+Context (here)

Part IIIB (10/29/25) The Agentic Commerce Optimization Playbook (**You are Here → The Grand Finale!**): 9 Easily Implementable Strategies and Tactics to dramatically improve your Agentic Commerce readiness, avoid the 13 pitfalls from Part II, and optimize the Seven Agentic Shopping Engines for maximum sales in Holiday ‘25.

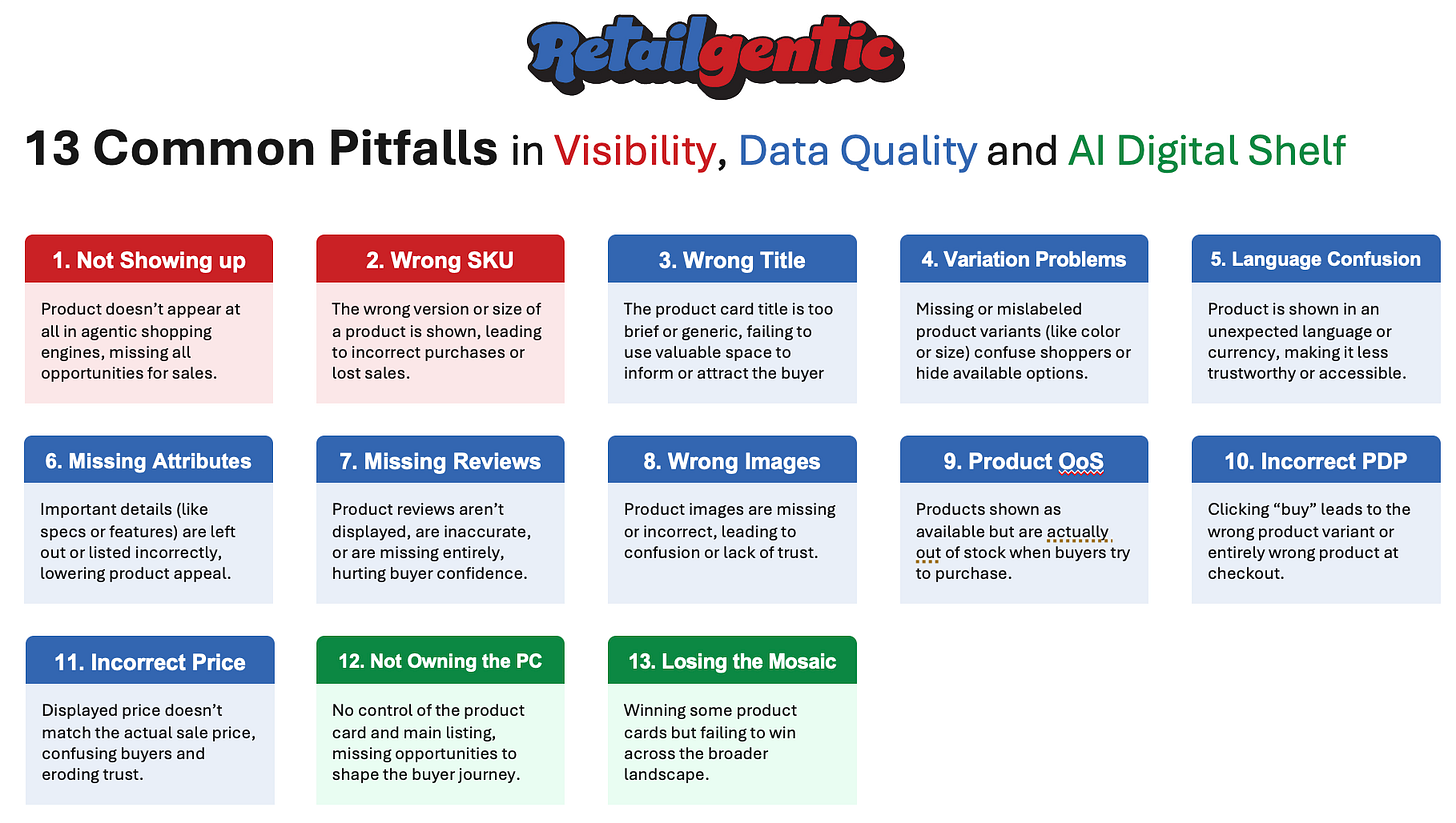

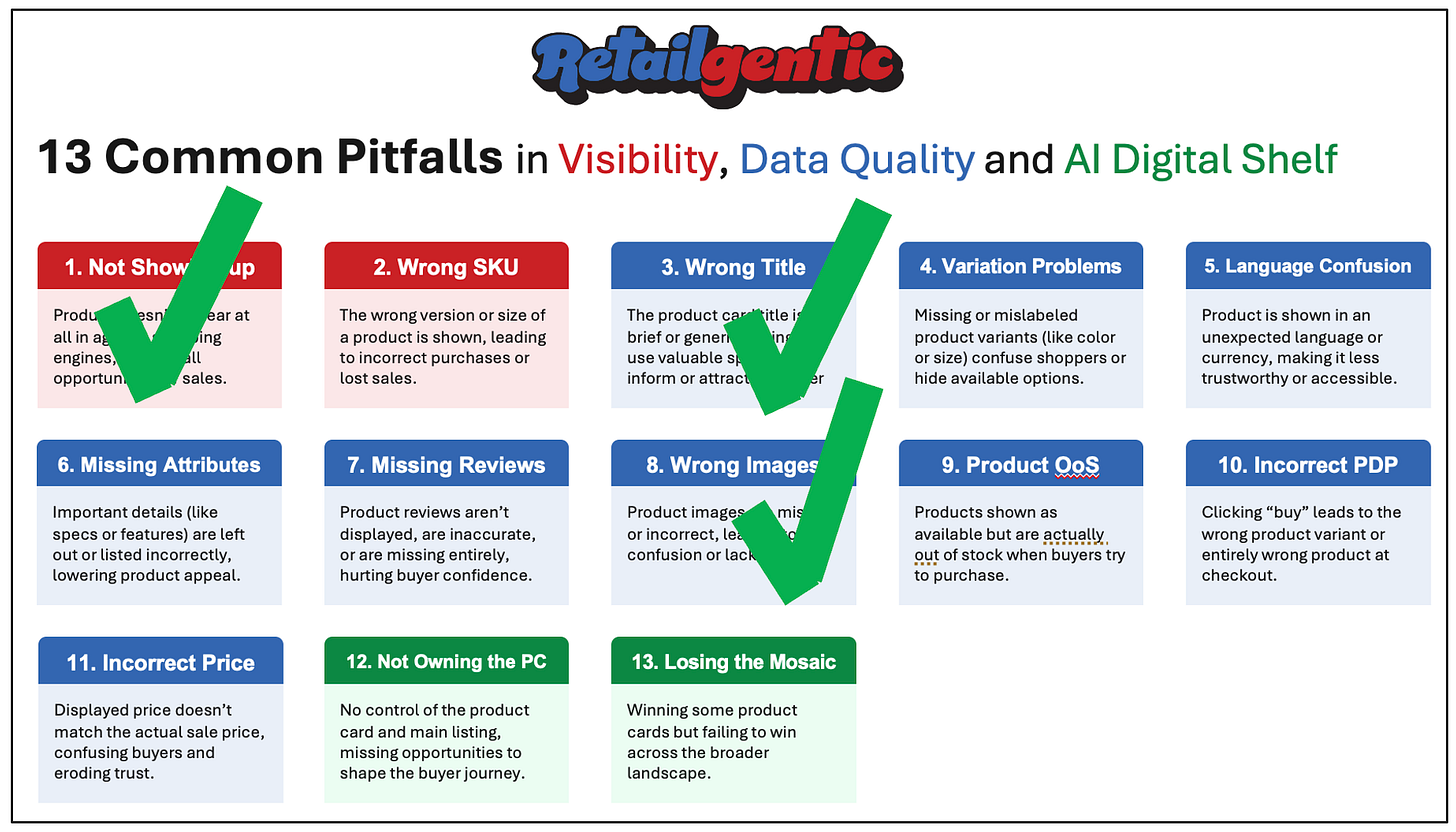

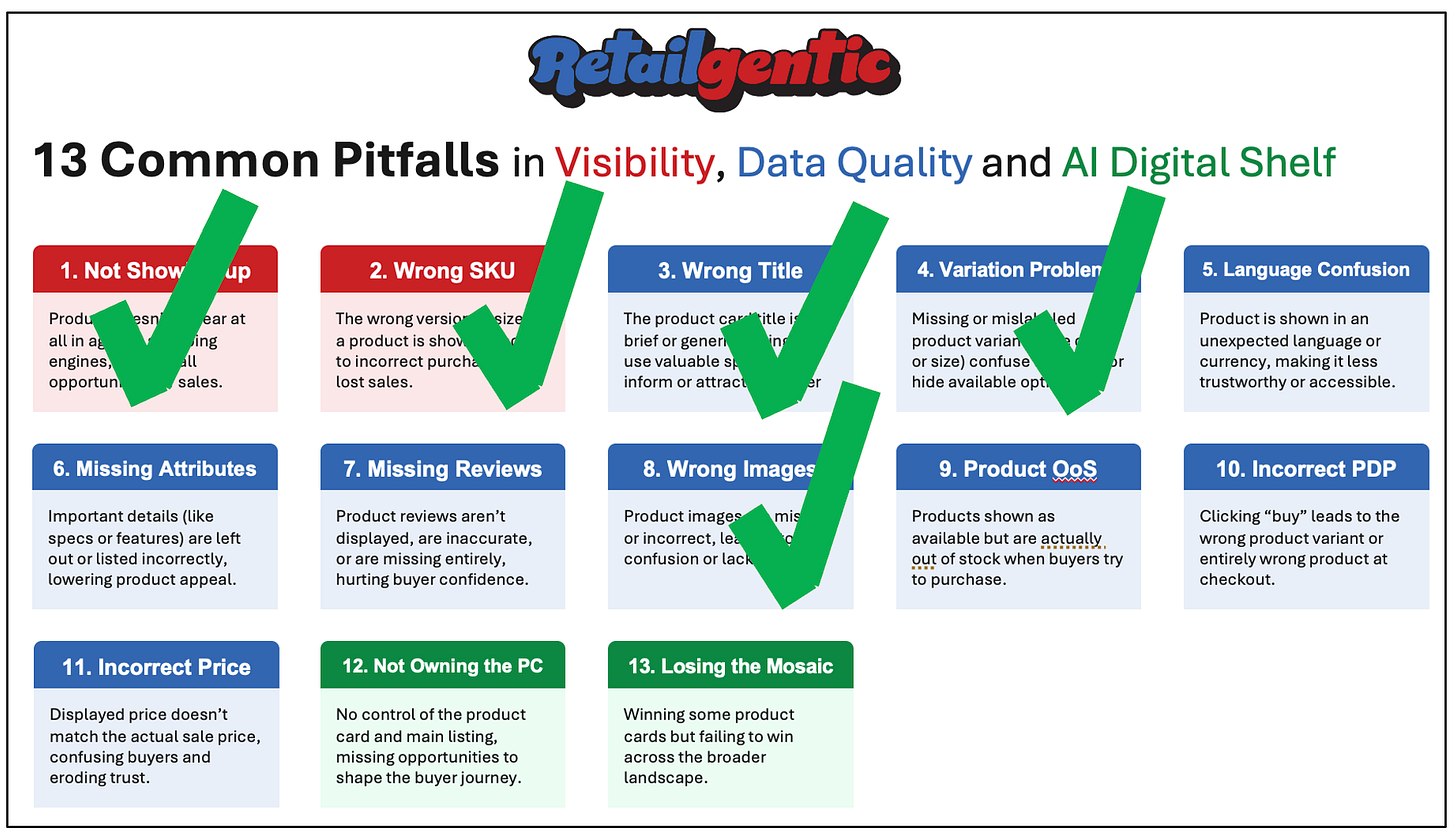

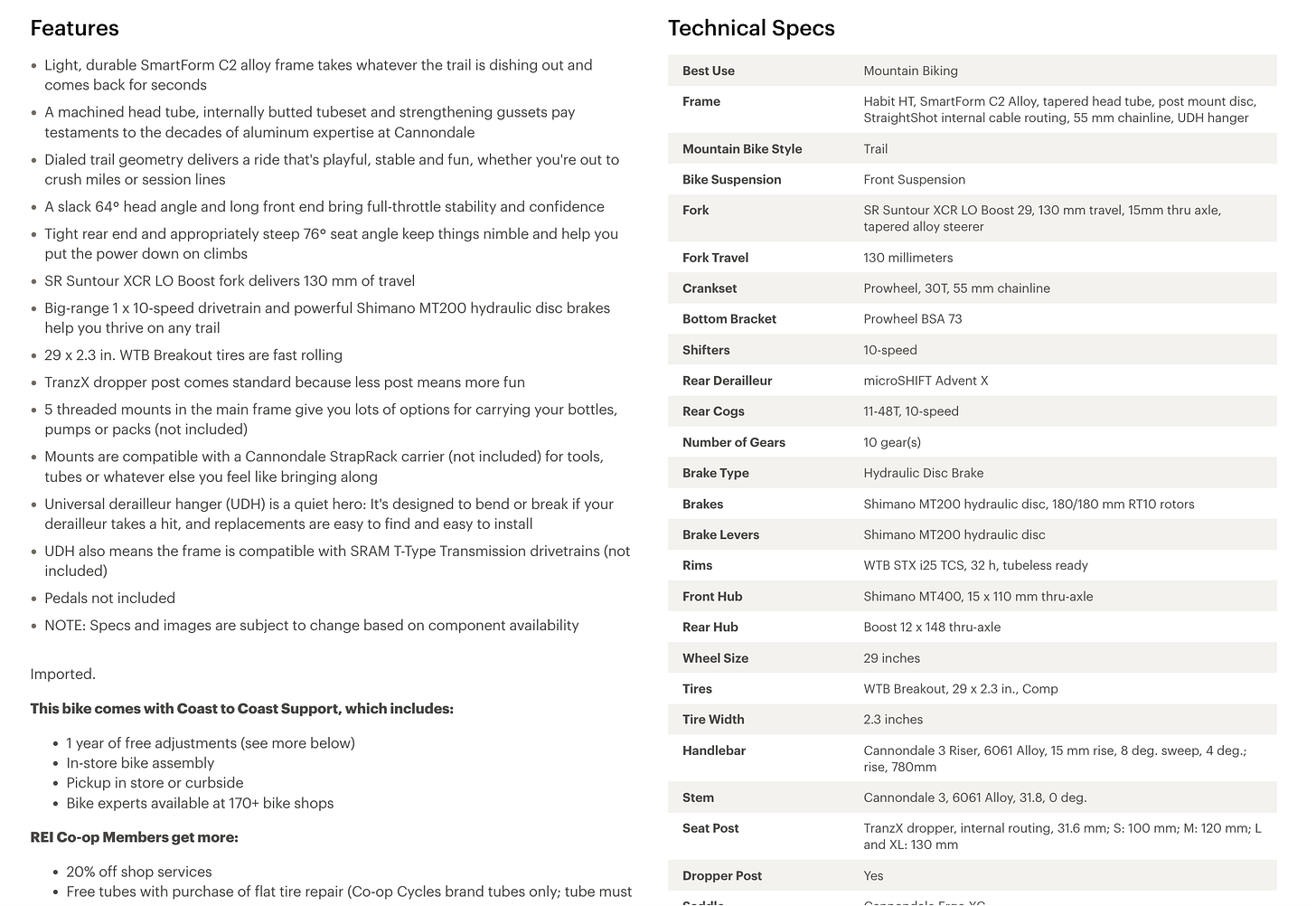

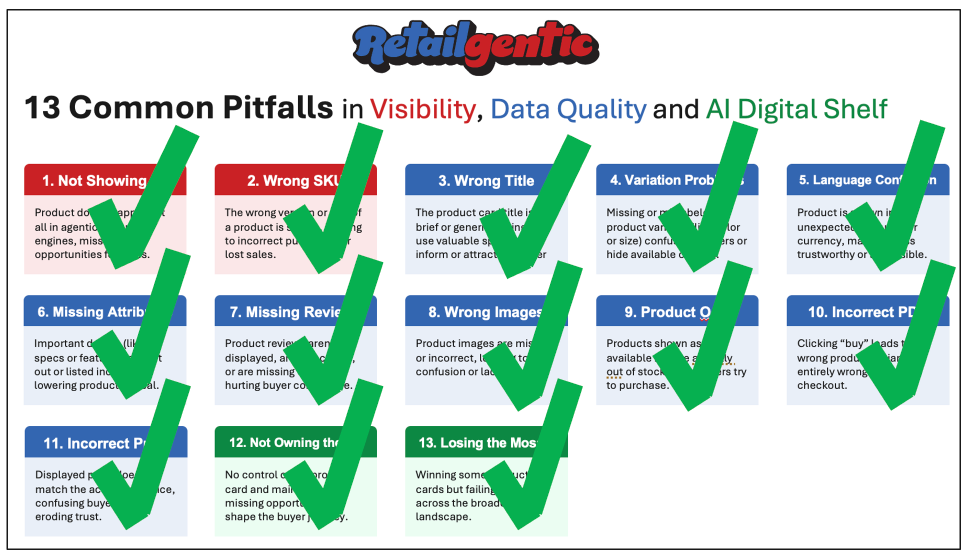

13 Agentic Commerce Common Pitfalls Refresher

As a refresher, in part 2, we covered 13 common Agentic pitfalls:

Foundational Concepts Recap

Then in Part IIIA we introduced these four foundational concepts:

Focus on Owning the Product Card - Do this phase first, trust me this is the path to long-term success and how you will win this race. In Part I and II, we showed you that most likely the majority of your SKUs have fallen into one of the 13 pitfalls and you are not getting the lion’s share of traffic you should be from Agentic Commerce.

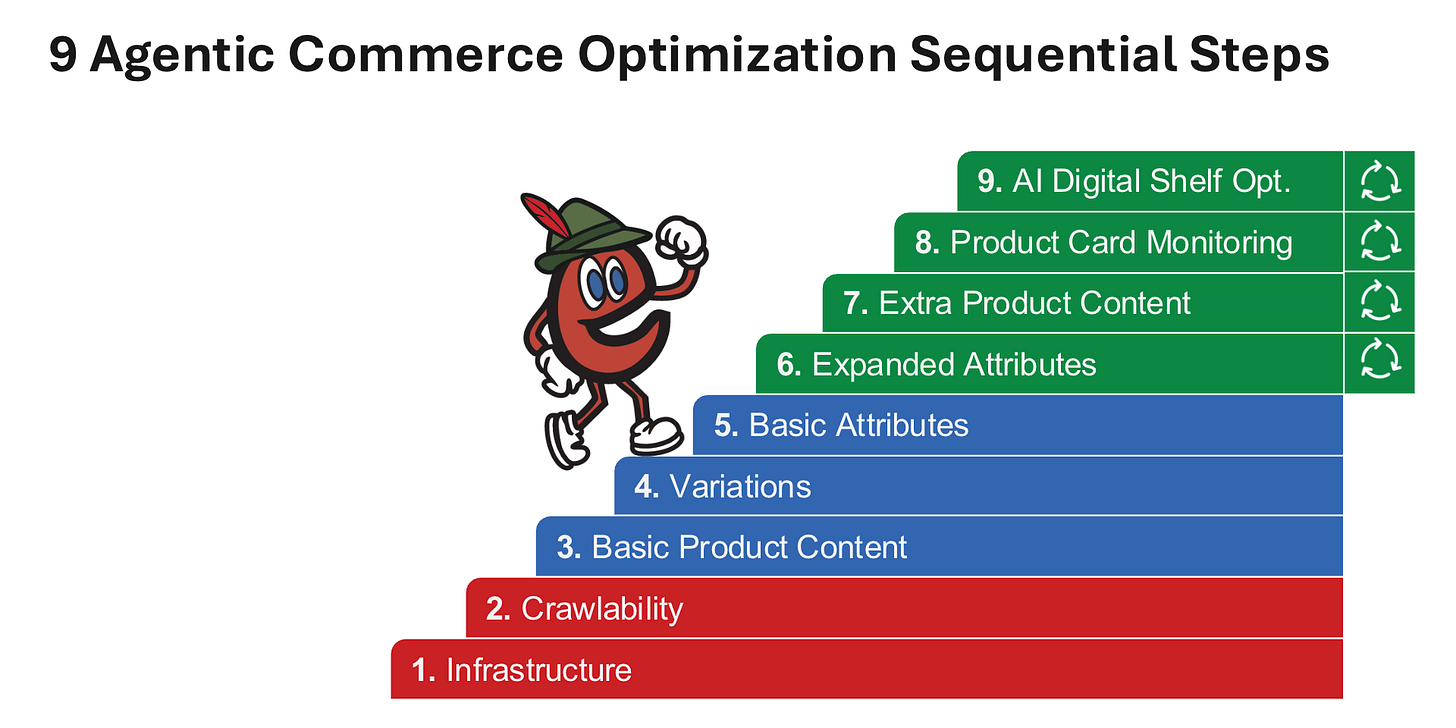

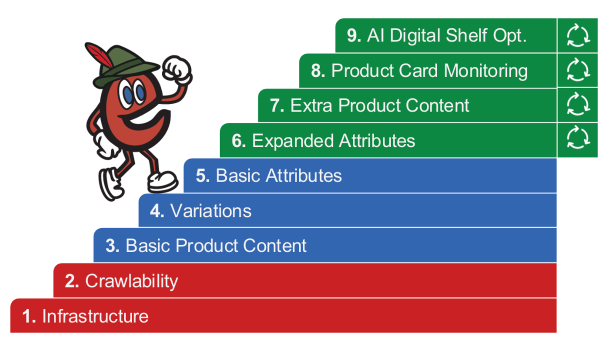

Nine-Step Agentic Commerce Optimization Playbook - To get your products out of the pitfalls, implement ACO Playbook steps 1-5, then continuously improve steps 6-9 until you feel you have reached the point of diminishing returns.

Content and Context - AEOs/LLMs crave as much product-level content and context as you can give them. Don’t fall into the trap of generating the on-site or off-site content that GEO vendors are pushing. First, GEO work done before you go through the ACO Playbook’s 9 steps is not going to increase transaction volume, and second, if you do anything shady, I think it’s clear OpenAI isn’t going to tolerate it. Focus on product-level data that a human would read if they had time.

Canonicalization (c14z) - The process Answer Engines go through to create canonical SKUs and match them up with offers.

The Retailgentic ACO Playbook

Let’s take each of the nine optimization steps introduced in IIIA from bottom to top and go over the best practices within each. Together these 9 strategies and the tactics within them give you a comprehensive ACO Playbook to start implementing, whether you are a retailer, brand or agency, and whether you have one SKU or millions. The same playbook applies at any size, but it is obviously a heavier lift for a large catalog because you need to follow it ‘per SKU’, and we’ve discovered that each SKU’s optimizations get very different once you are into steps 3+.

Infrastructure - Step 1

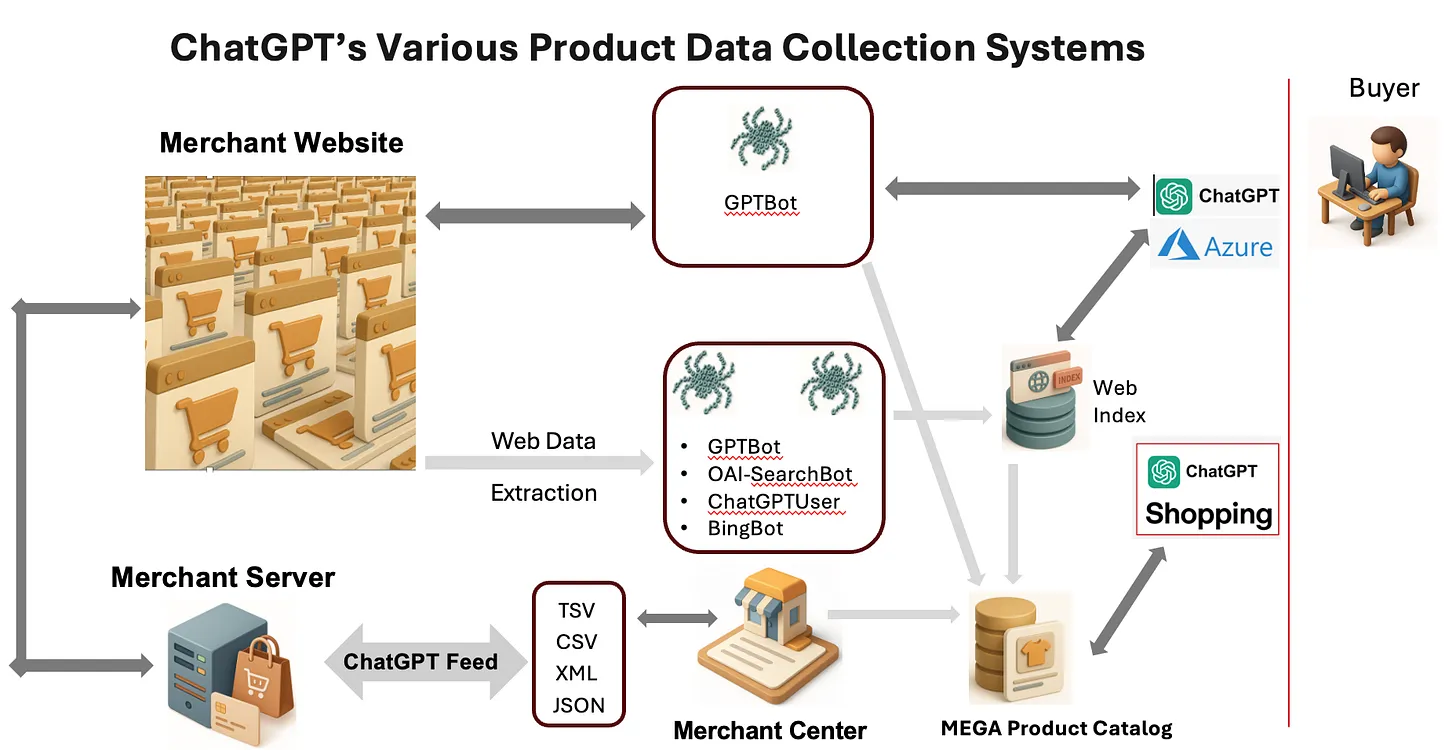

For Steps 1 and 2, Infrastructure and Crawlability, I’m going to bring back the diagram we used to explain how ChatGPT Checkout datafeeds work in concert with the crawlers, because it’s applicable here→

In this diagram, imagine that your MIS/IT team decided that GPTBot, OAI-SearchBot and ChatGPT-User are evil and put a wall around the merchant website and the merchant server. The Answer Engine’s crawlers couldn’t read your PDPs, so they wouldn’t have your products in their MEGA Product Catalog, and so on. And if the Agentic Commerce Engine sends an agent to purchase something on behalf of the buyer, you’re going to block a buyer.

Both the Infrastructure and Crawlability sections are table stakes for working with agents. They need access to your product catalog via the PDPs (infrastructure), and they need help to make sure they are getting the width (all the catalog SKUs) and the depth (all the data they want, which is a mile deep).

The very first step is to make sure the Agentic Commerce crawlers are not being blocked somewhere in your technology stack. When a web client (human or non-human) visits your site, it identifies itself through the ‘user agent’ field. You need to make sure these user agents are whitelisted, or at least not blocked, at:

robots.txt

Your DNS provider

Your Cybersecurity/Firewall/DDoS protection provider (e.g. Cloudflare)

Your CDN (Akamai, Cloudflare, CloudFront, Fastly, Google Cloud CDN)

Any other infrastructure your site, or your ecommerce host/platform utilizes that could be blocking these bots.
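If you want a quick way to sanity-check the robots.txt layer, here is a minimal Python sketch using the standard library’s `urllib.robotparser`. The sample robots.txt content and the example.com URL are made up for illustration, and the bot list only covers the three OpenAI agents named above. It only tells you what robots.txt says, not what your firewall or CDN does.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; paste in your own file's text to test it.
SAMPLE_ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Disallow: /cart/
"""

# Crawler tokens named in this article; extend with any others you care about.
AI_BOTS = ["GPTBot", "OAI-SearchBot", "ChatGPT-User"]


def robots_access(robots_txt: str, url: str, bots: list[str]) -> dict[str, bool]:
    """Return {bot: True/False} for whether robots.txt lets each bot fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {bot: parser.can_fetch(bot, url) for bot in bots}


if __name__ == "__main__":
    pdp_url = "https://www.example.com/products/tent"  # hypothetical PDP
    for bot, allowed in robots_access(SAMPLE_ROBOTS_TXT, pdp_url, AI_BOTS).items():
        print(f"{bot}: {'allowed' if allowed else 'BLOCKED'}")
```

In this made-up file GPTBot is shut out of the whole site while the other two agents fall through to the `*` rule and can still reach the PDP.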

You can find a really great list put together by this SEO guru here. I recommend reviewing the AI-focused ones; there are 3-4 on there you can omit. Whatever you decide, I think someone in the ecommerce/marketing/merchandising org should be tasked with liaising with IT/MIS to make sure you all know who is on the list and who is off, because some day, Grok is going to come out with an awesome Agentic Commerce feature, and you’re going to wonder why your SKUs are mysteriously absent.

What About the ChatGPT ACP Feed?

Yes, this is good for ChatGPT and maybe a way to keep blocking and still get your product data. We haven’t been able to definitively test that this works yet, plus remember you’ll be blocking the other six Agentic Commerce Engines if you go this route. It’s better to let the digital shoppers into the store.

How do I Check If I am Blocking the Good Bots?

The first hint that you are blocking is if none of your products are showing up in an Agentic Commerce Engine. Even if your site has very bad setups for the steps above this, you will have some products showing up. What complicates this is that, if you look at the ChatGPT diagram above, you’ll notice BingBot. The engines all partner with different companies and will even do things like crawl Google Shopping for product data. That makes blocking a bit harder to diagnose, so when you see spotty SKU coverage on an engine, any of the step 1-5 items could be playing a role. To rule blocking out, most webmasters have access to developer tools where they can say: go to my website and identify yourself as OAI-SearchBot. If your website returns a ‘403 (Forbidden)’, you now know with certainty that you are blocking that bot at the infrastructure layer.
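Here is a rough Python sketch of that “say you are OAI-SearchBot” test, using only the standard library. The user-agent strings are simplified stand-ins I made up (the real ones are longer, so copy them from each engine’s crawler documentation), and treating 401/403/429 as “blocked” is my own assumption. Also keep in mind that a firewall that verifies bots by IP address may treat a spoofed request from your laptop differently than the real crawler.

```python
import urllib.error
import urllib.request

# Simplified user-agent tokens (assumption): the real strings are longer,
# so copy the exact values from each engine's crawler documentation.
BOT_USER_AGENTS = {
    "GPTBot": "GPTBot/1.0",
    "OAI-SearchBot": "OAI-SearchBot/1.0",
    "ChatGPT-User": "ChatGPT-User/1.0",
}


def fetch_status(url: str, user_agent: str, timeout: float = 10.0) -> int:
    """Request url while identifying as user_agent; return the HTTP status code."""
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # 4xx/5xx responses arrive as exceptions


def blocked_bots(statuses: dict[str, int]) -> list[str]:
    """Names of bots whose request was refused (401, 403 or 429)."""
    return [bot for bot, code in statuses.items() if code in (401, 403, 429)]


def check_site(url: str) -> list[str]:
    """Probe url once per bot and list the bots that look blocked."""
    statuses = {bot: fetch_status(url, ua) for bot, ua in BOT_USER_AGENTS.items()}
    return blocked_bots(statuses)
```

Calling `check_site` with one of your own PDP URLs returns the list of bots that were refused; an empty list means none of the probes were turned away at this layer.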

How Can I See What The Bots are Doing?

Most web analytics tools can be configured to show you what’s going on here, but they tend to hide it by default so you only see how humans are interacting with your site. Also, the developers in your company can always get to the raw log files for your web server(s) which are unusable in that format, but there are many tools available to help you read those tea leaves and recreate the session and what they did.
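To give a feel for what “reading the tea leaves” looks like, here is a small Python sketch that counts bot hits per status code from raw access-log lines. It assumes your server writes the common “combined” log format; the sample lines, IP addresses and user-agent strings are invented for illustration.

```python
import re
from collections import Counter

# Bot tokens to look for inside the user-agent field (case-insensitive).
BOT_TOKENS = ["GPTBot", "OAI-SearchBot", "ChatGPT-User", "bingbot"]

# Matches the request, status, size, referrer and user-agent parts of a
# combined-format log line. Other log formats will need a different pattern.
LOG_LINE = re.compile(
    r'"(?:GET|HEAD|POST) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ "[^"]*" "(?P<ua>[^"]*)"'
)

SAMPLE_LOG = [
    '203.0.113.5 - - [29/Oct/2025:10:00:00 +0000] "GET /products/tent HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
    '203.0.113.6 - - [29/Oct/2025:10:00:01 +0000] "GET /products/bike HTTP/1.1" 403 312 "-" "Mozilla/5.0 (compatible; OAI-SearchBot/1.0)"',
    '203.0.113.7 - - [29/Oct/2025:10:00:02 +0000] "GET /products/tent HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (Windows NT 10.0) Chrome/130.0"',
    '203.0.113.5 - - [29/Oct/2025:10:00:03 +0000] "GET /products/paddle HTTP/1.1" 200 4800 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
]


def bot_hits(log_lines):
    """Count requests per (bot, HTTP status); human traffic is ignored."""
    hits = Counter()
    for line in log_lines:
        match = LOG_LINE.search(line)
        if not match:
            continue
        user_agent = match.group("ua").lower()
        for bot in BOT_TOKENS:
            if bot.lower() in user_agent:
                hits[(bot, int(match.group("status")))] += 1
                break
    return hits


if __name__ == "__main__":
    for (bot, status), count in sorted(bot_hits(SAMPLE_LOG).items()):
        print(f"{bot} {status}: {count}")
```

A pile of 403s next to one bot name is the same signal as the spoofing test, just seen from the server side.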

Google Analytics is free and most people have it on their site. This discussion on Stack Overflow talks about how to remove a certain agent, but the first part of the solution gives good step-by-step instructions on how to use Tag Manager to identify them - just do that and stop there and you’re good to go.

Crawlability - Step 2

Once the crawler can get to your site, you need to do 2 things to make sure it has pulled in all of your amazing product catalog content:

Entire Catalog Crawled - Make sure the crawlers are getting as much of your product catalog into their ‘aggregate product catalog’ where they store everyone’s inventory for processing and surfacing as answers (or product cards).

Catalog Crawled Correctly - Answer engine crawlers are finicky, especially with PDPs. They don’t render javascript, so anything that requires it is going to be missed. While they see the URL for an image, they don’t ‘look at’ each image for words to pull out. Many merchants’ reviews, variations and entire sets of images are loaded through javascript, so the crawler never sees them.

As you start to get to steps 8 and 9, and you are figuring out why your products aren’t showing up, start here in step 2 (after you’ve confirmed step 1 is good) and do some work with the tools previously mentioned to make sure your product was crawled. If you implement these next best practices, that will be increasingly unlikely.

Crawlability: Ensuring Your Entire Catalog is Crawled

Good news: there is a proposed standard, similar to robots.txt, called llms.txt. Think of it as a guide to the LLM on how to crawl your site. In our world of Agentic Commerce, we want to use it to tell the LLM crawlers - here’s our product catalog and where everything is.

llms.txt also allows you to take longer content and compact it into markdown so the engines can fit large data into the context window. If you want to learn more, here’s where to start: the llms.txt standard specification.

We had a situation where a portion of a retailer’s site wasn’t being crawled. The reason why doesn’t matter, but it was an area of the site, like ‘new releases’ or something like that, that the crawler missed. Updating the sitemap and llms.txt virtually guaranteed the crawler would pick it up on the next pass, and it did.
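As a sketch of what that looks like in practice, here is a short Python function that writes an llms.txt file pointing at catalog sections. The layout (an H1 title, a blockquote summary, then H2 sections of markdown links) follows my reading of the llms.txt proposal; the store name, URLs and notes are invented.

```python
def build_llms_txt(site_name, summary, sections):
    """Render llms.txt text.

    sections maps a heading to a list of (title, url, note) tuples.
    """
    lines = [f"# {site_name}", "", f"> {summary}", ""]
    for heading, links in sections.items():
        lines.append(f"## {heading}")
        lines.append("")
        for title, url, note in links:
            lines.append(f"- [{title}]({url}): {note}")
        lines.append("")
    return "\n".join(lines).rstrip() + "\n"


# Hypothetical catalog sections, including the kind of 'new releases' area
# a crawler might otherwise miss.
SECTIONS = {
    "Product Catalog": [
        ("All tents", "https://www.example.com/tents", "Full tent category"),
    ],
    "New Releases": [
        (
            "The North Face Wawona 6 Tent",
            "https://www.example.com/products/wawona-6",
            "6-person camping tent",
        ),
    ],
}

if __name__ == "__main__":
    text = build_llms_txt("Example Outfitters", "Outdoor gear retailer.", SECTIONS)
    print(text)  # save this output as /llms.txt at the site root
```

Regenerating the file from your catalog on a schedule keeps new areas of the site from silently falling out of it.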

Crawlability: Catalog Crawled Correctly

Let’s say that for the human shopper you want a fancy javascript-based interface for your variations, images and reviews. The crawler is blind to that interface, so to give it another way to get the information you need to put the same data on the page in a metadata format. schema.org is a standard for doing this. It allows your PDP to have two versions:

Human Shopper Version - What you see in your modern browser.

ACO Enhanced Version for Agentic Shoppers - We can take basic information that crawlers miss and use schema.org to put the data in metadata, and the crawler will gobble it up like Pac-Man.

This is super convenient because we have a conundrum: the engines would love an 80-page PDF for each product, chock-full of content and context, and no consumer would want to slog through that. Datafeeds are one way to handle this, but for those engines that don’t take a datafeed, schema.org is a foundational way to get data into the engines without interfering with the human-optimized PDP.
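For anyone who hasn’t seen schema.org metadata before, here is a minimal Python sketch that builds a JSON-LD `Product` block you could drop into a PDP’s HTML. The property names (`name`, `brand`, `sku`, `image`, `offers`, and so on) are standard schema.org ones; the product values in the usage line are placeholders, and which fields each engine actually reads is something you will have to test.

```python
import json


def product_jsonld(name, brand, sku, description, image_urls, price, currency):
    """Return a <script> tag holding schema.org Product metadata as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "brand": {"@type": "Brand", "name": brand},
        "sku": sku,
        "description": description,
        "image": image_urls,  # list order is preserved in the output
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
            "availability": "https://schema.org/InStock",
        },
    }
    # Escape "</" so a description containing "</script>" cannot end the tag.
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + body + "\n</script>"


if __name__ == "__main__":
    print(
        product_jsonld(
            name="Example Camping Tent 6",
            brand="Example Brand",
            sku="EX-TENT-6",  # hypothetical SKU
            description="Six-person tent for car camping.",
            image_urls=["https://www.example.com/img/tent-hero.jpg"],
            price=59.5,  # placeholder price
            currency="USD",
        )
    )
```

Because the block is plain text in the page source, it is there even when the visible gallery, variations and reviews are rendered with javascript.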

Pitfall Tracker:

Between Steps 1 and 2 we’ve cleared off pitfall 1 from happening and if it does, you know how to fix it.

Basic Product Content - Step 3

Once you have made sure you aren’t blocking bots and your sites are optimized for crawlability, the next step towards optimization is working on your Basic Product Content.

Within Basic Content we have:

Hero images/gallery

Product Title/subtitle

Price

Any special offers for this product

Shipping times (could be calculated at checkout, which is fine), any special offers on shipping, things of that nature. (Usually on a PDP, this is the content before you get to variations.)

Short description and long description

Any special offers - BOGO, promo codes, spend $X get free ship, etc.

Step 3A: Add Context

Remember, in the ChatGPT Instant Checkout they give detailed technical specs of the amount of data you can send. Use it all, it’s free and unlike your PDP, you want to load it with context:

Here are the basic content areas you can fill up:

First, look at this information, especially the short and long descriptions, and add some context. For example, if it’s a beauty product, does it have a scent profile? If it’s a sporting goods product, is it for beginner/intermediate/advanced players, and is it indoor/outdoor? You also want to look at your images for this product and make sure any important product information in them (measurements, feature/benefit chart, materials, ingredients, etc.) is also reflected on the PDP. If you want to keep your human-facing PDPs tight and keyword focused, you can put this information in the metadata or a datafeed instead (only some of the engines take datafeeds though, so metadata and the PDP are your biggest bang for the buck).

Step 3B: Nail Your Canonicalization in the Title

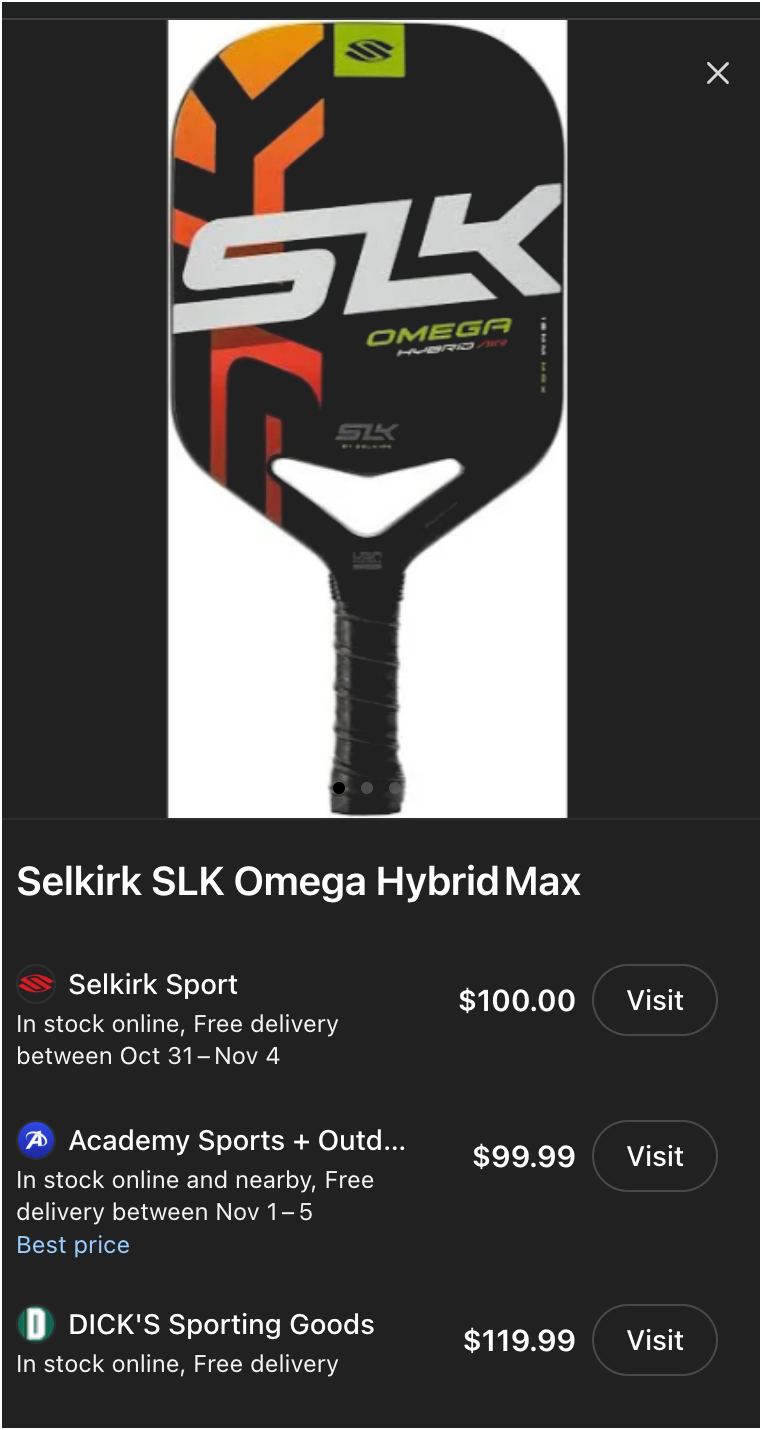

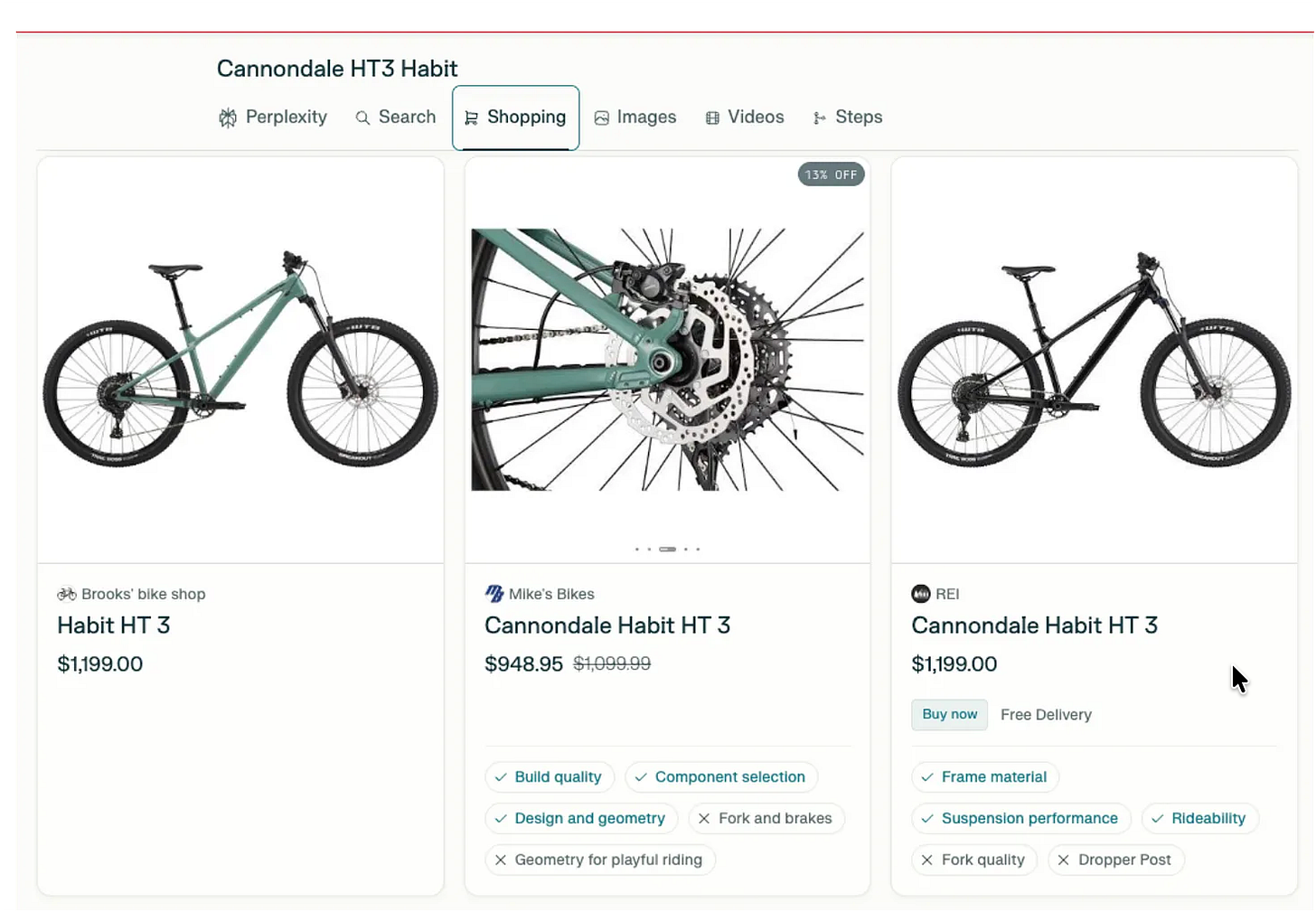

Second you want to really focus in on the main title. When the various Answer engines are canonicalizing your offers with their catalog, based on our investigative work, the first thing they do is try to match the title or a portion of it. Remember these pitfalls from Part II: (I’ve made them small as a reminder)

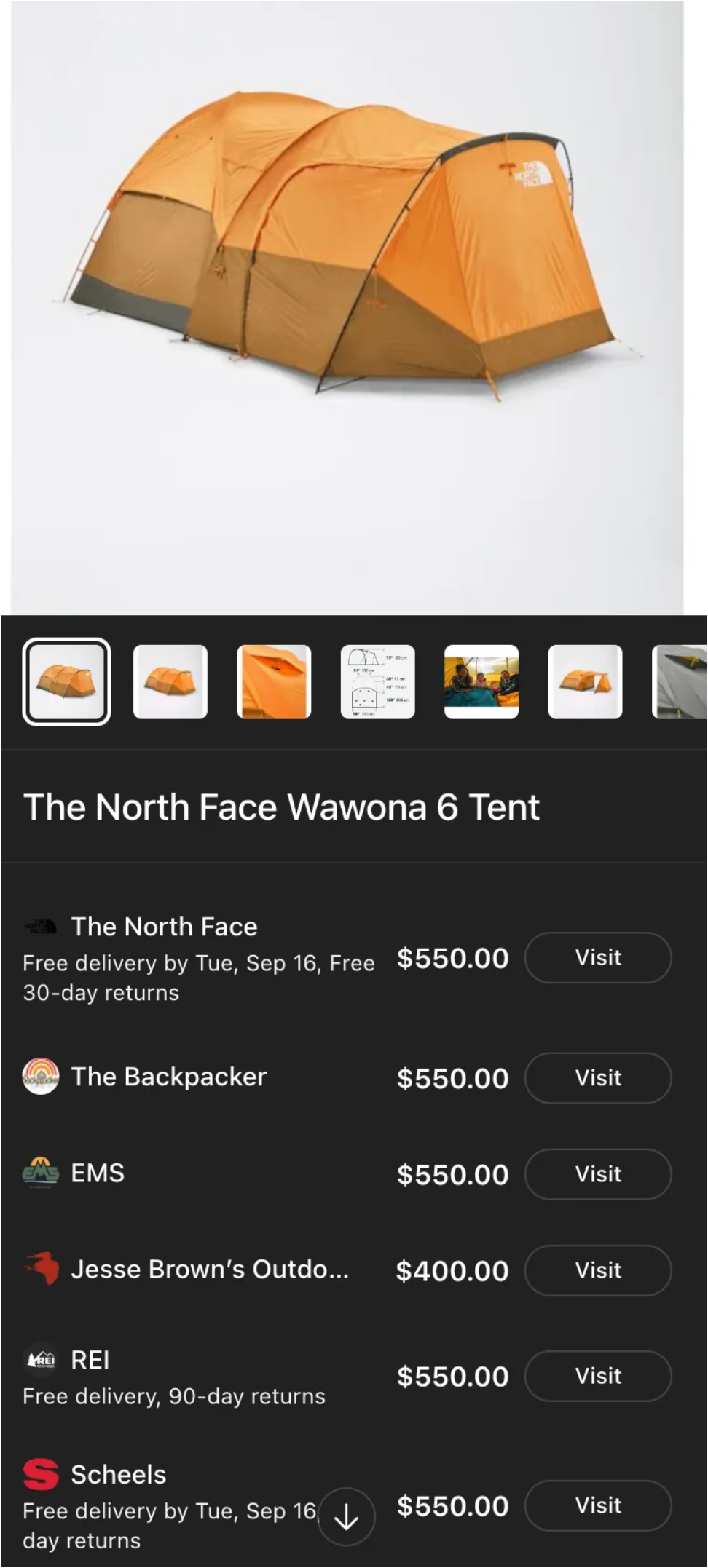

In the tent example, everyone else has adopted the product title “The North Face Wawona 6 Tent”, except for Jesse Brown, who has given their SKU the title “The North Face Wawona Tent” (no number of sleepers/capacity designated). As a result, the engine canonicalizes the Jesse Brown 4-person tent with the 6-person tent. This means that over on the 4-person card they aren’t getting any traffic, and over here on the 6-person card they have a crazy low price, but it’s the wrong product. They are in a lose/lose situation thanks to their product title.

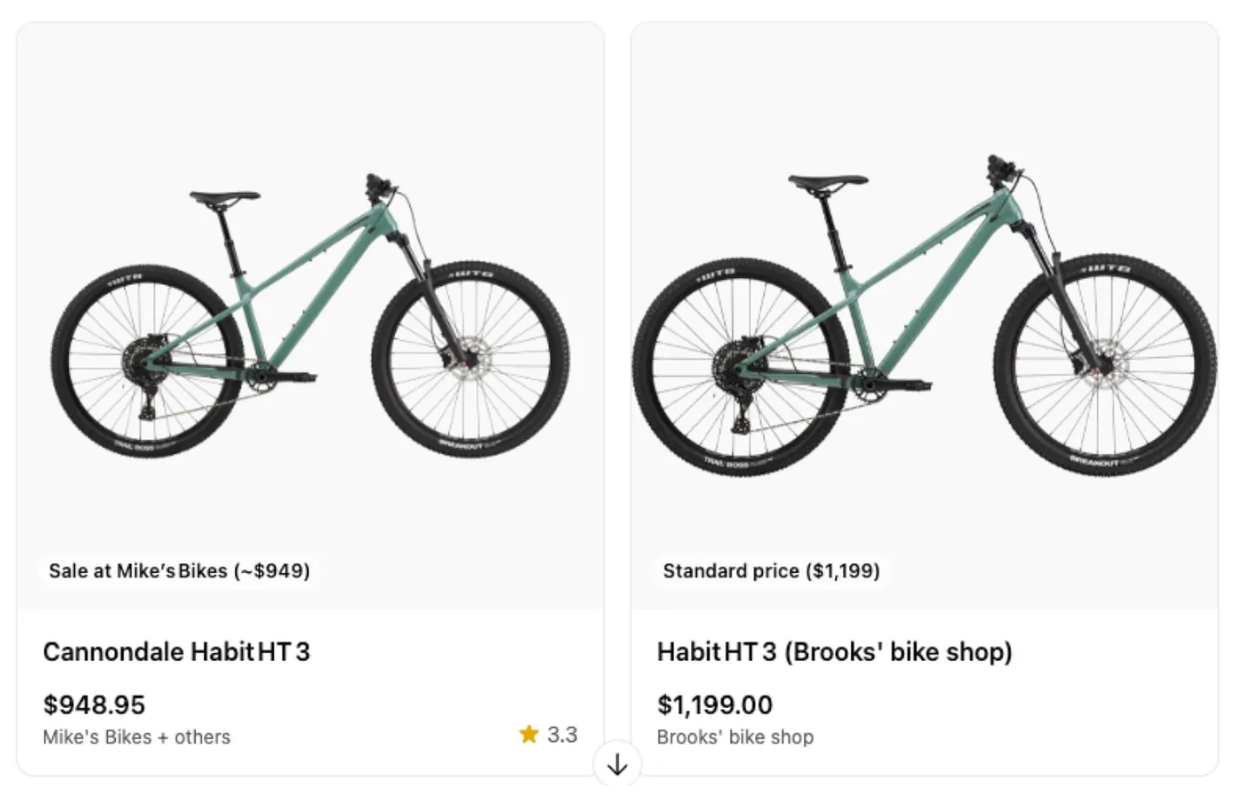

In the bike example, the standard from ‘Mike’s Bikes’ is to use the brand name and then the model. Brooks doesn’t do that, which causes a second product card to be created - the offer was not correctly canonicalized. Brooks got lucky here: as more products enter the catalog, an offer that can’t be canonicalized will just be ignored instead of getting its own card. We’re seeing this happen with increasing frequency and it’s quite tricky to debug.

Step 3C: Pro Tips

Canonicalization is so important that you want to reverse engineer it by working backwards. Go to your target answer engine and search for the product you are optimizing to get a product card.

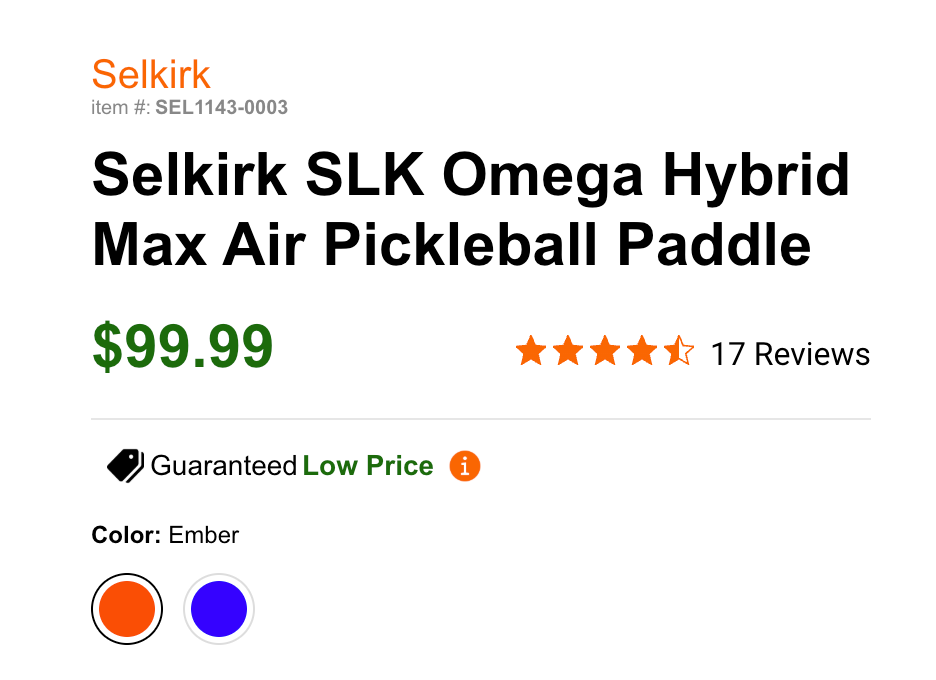

Let’s say I’m working for Pickleball Central and working on optimizing this paddle that has this title:

Looking at the product card on ChatGPT, I see these details and three offers (not mine! It’s ok, that’s why we do this).

Product card→

Offers→

If we assume that I’ve gone through steps 1 and 2, yet I’m not showing up, most likely we have a canonicalization problem. Do you see it?

The ChatGPT Title is: “Selkirk SLK Omega HybridMax”

My Pickleball Central Title is: “Selkirk SLK Omega Hybrid Max Pickleball Paddle”

The space between Hybrid+Max, plus the words Pickleball Paddle, is enough to throw the canonicalization off. Now you could argue this is a ChatGPT bug and they should fix that. Sure, but when the weather is cold, I don’t complain, I put on a jacket. In other words, your chances of getting this fixed at ChatGPT are near zero, so why not just change your title, either on the PDP or at a minimum in your feed, to follow this de facto standard?
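When you have thousands of SKUs, eyeballing these differences gets old fast. Here is a small Python sketch that compares your title against the title on the product card and reports what differs. The comparison rules (ignore case and spacing, list extra and missing words) are my own heuristic, not how any engine actually canonicalizes.

```python
import re


def normalize(title):
    """Lowercase and strip everything except letters and digits."""
    return re.sub(r"[^a-z0-9]", "", title.lower())


def title_report(my_title, card_title):
    """Describe how my_title differs from the title shown on the product card."""
    my_words = my_title.split()
    card_words = card_title.split()
    return {
        "exact_match": my_title == card_title,
        # True when my title is the card title plus trailing words, once
        # spacing and case are ignored.
        "card_title_is_prefix_ignoring_spacing": normalize(my_title).startswith(
            normalize(card_title)
        ),
        "extra_words": [w for w in my_words if w not in card_words],
        "missing_words": [w for w in card_words if w not in my_words],
    }


if __name__ == "__main__":
    report = title_report(
        "Selkirk SLK Omega Hybrid Max Pickleball Paddle",  # my PDP title
        "Selkirk SLK Omega HybridMax",  # title on the ChatGPT card
    )
    for key, value in report.items():
        print(f"{key}: {value}")
```

For the paddle, the report flags “Hybrid”, “Max”, “Pickleball” and “Paddle” as extra words and “HybridMax” as missing, which is exactly the spacing and suffix problem described above.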

Want to make your head explode? Perplexity canonicalizes it WITH THE SPACE!

Therefore, unlike the ‘Feed 1.0’ era where you had one feed in slightly different formats, what you realize quickly in ACO is that each feed becomes ‘bespoke’/unique very quickly, with different information.

For example, for the title for this paddle you may very well need to have:

Perplexity feed: title: Selkirk SLK Omega Hybrid Max Pickleball Paddle

ChatGPT feed: title: Selkirk SLK Omega HybridMax
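One way to manage bespoke feeds without maintaining several copies of your catalog is a per-engine override table. Here is a minimal Python sketch of that idea; the SKU, the engine keys and the table layout are all hypothetical, and the titles follow the card titles quoted earlier in this step.

```python
# Base catalog: one record per SKU with your default (PDP) title.
PRODUCTS = [
    {"sku": "SLK-OMEGA-HM", "title": "Selkirk SLK Omega Hybrid Max Pickleball Paddle"},
    {"sku": "EX-TENT-6", "title": "Example Camping Tent 6"},
]

# Per-engine title overrides, keyed by engine name and then SKU.
# Only SKUs whose card title differs from your default need an entry.
TITLE_OVERRIDES = {
    "chatgpt": {"SLK-OMEGA-HM": "Selkirk SLK Omega HybridMax"},
    "perplexity": {},
}


def feed_rows(products, engine):
    """Return copies of the product records with engine-specific titles applied."""
    overrides = TITLE_OVERRIDES.get(engine, {})
    return [{**p, "title": overrides.get(p["sku"], p["title"])} for p in products]


if __name__ == "__main__":
    for engine in ("chatgpt", "perplexity"):
        print(engine, [row["title"] for row in feed_rows(PRODUCTS, engine)])
```

The base catalog stays untouched, so the overrides are easy to audit and to remove if an engine changes how it canonicalizes.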

Step 3D: Pro Tip: Images

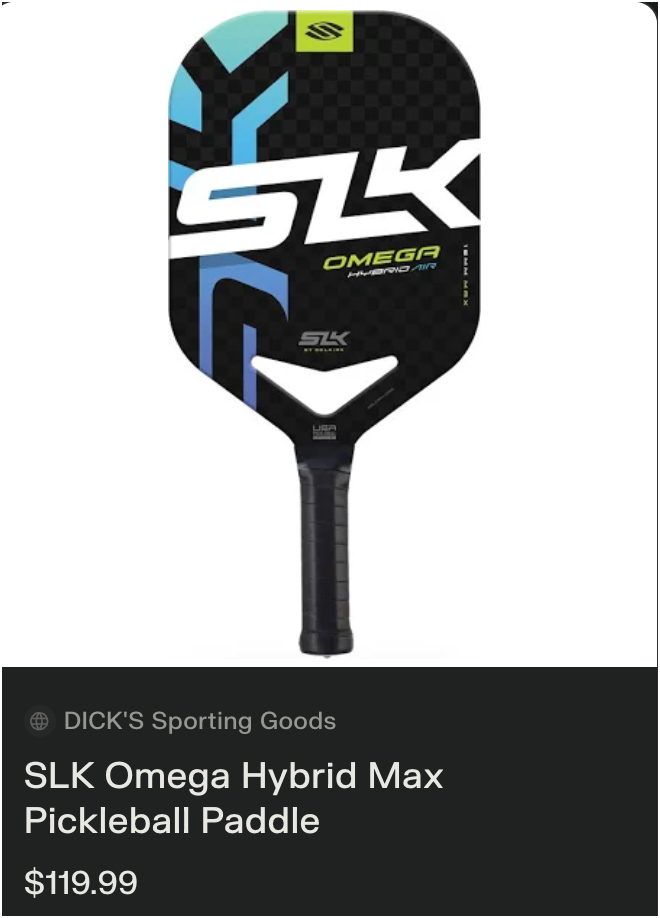

If you remember pitfall 8, that’s a basic product content issue. Here’s the pitfall example:

A couple of thoughts on images:

Unfortunately, the way the target system picks a hero image is not up to you. However, you can make sure your images are seen by the crawler. Many sites have a carousel or fancy gallery for picking images - these are typically javascript and you’ll need to provide accommodations for them in your metadata. Be sure to put your desired hero image first so it is seen as top priority by the crawler.

If your image has any text in it, provide that text in the alt text for the image. While LLMs have the ability to extract text from images, they do not do this when crawling product detail pages because it’s a very heavy/expensive operation (uses a lot of tokens).

While debugging an image problem, focus on getting your hero image in the product card correctly (if not there, keep working on the metadata or feed). Once you have successfully got your hero image into the card, you can work on extra images from there. You want to limit the variables in these trouble-shooting scenarios.
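To check the first two points on a page, here is a short Python sketch that reads raw PDP HTML (no javascript rendering, like the crawlers) and reports which image comes first and which images have no alt text. The sample markup and file names are invented.

```python
from html.parser import HTMLParser


class ImageAltAudit(HTMLParser):
    """Collect (src, alt) for every <img> tag in the order it appears."""

    def __init__(self):
        super().__init__()
        self.images = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            attr = dict(attrs)
            src = attr.get("src") or ""
            alt = (attr.get("alt") or "").strip()
            self.images.append((src, alt))


def audit_images(html):
    """Return the first image found and the images with missing/empty alt text."""
    parser = ImageAltAudit()
    parser.feed(html)
    return {
        "first_image": parser.images[0][0] if parser.images else None,
        "missing_alt": [src for src, alt in parser.images if not alt],
    }


SAMPLE_HTML = """
<img src="/img/paddle-hero.jpg" alt="Pickleball paddle, front view">
<img src="/img/paddle-size-chart.jpg">
<img src="/img/paddle-grip.jpg" alt="">
"""

if __name__ == "__main__":
    print(audit_images(SAMPLE_HTML))
```

If `first_image` is not your intended hero, or the list is empty because the gallery is built by javascript, that is your cue to move the images into the metadata or feed.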

Pitfall Tracker:

With proper care to your basic product content, you can ‘nudge’ the engines to canonicalize your products for the ‘Find’ part of the funnel and also provide some extra context to help surface your product in the ‘Research’ top of the funnel.

Finally, once you are canonicalized, experiment with adding more words to the title to strike a balance between ‘using max real estate’ and ‘breaking canonicalization’ and dropping off the card. Get on the card and then get sharp elbows.

In hindsight, one pitfall I should have included in the 13 Pitfalls that is really the biggest one is: “My product isn’t showing up, where is it”. Hopefully the examples of the tent, pickleball paddle and Cannondale bike have given you some tools and techniques to figure out why your products are not showing up and from there you can sleuth out what’s going on. Remember though - don’t skip steps 1 and 2 first as they could be the culprits!

Variations - Step 4

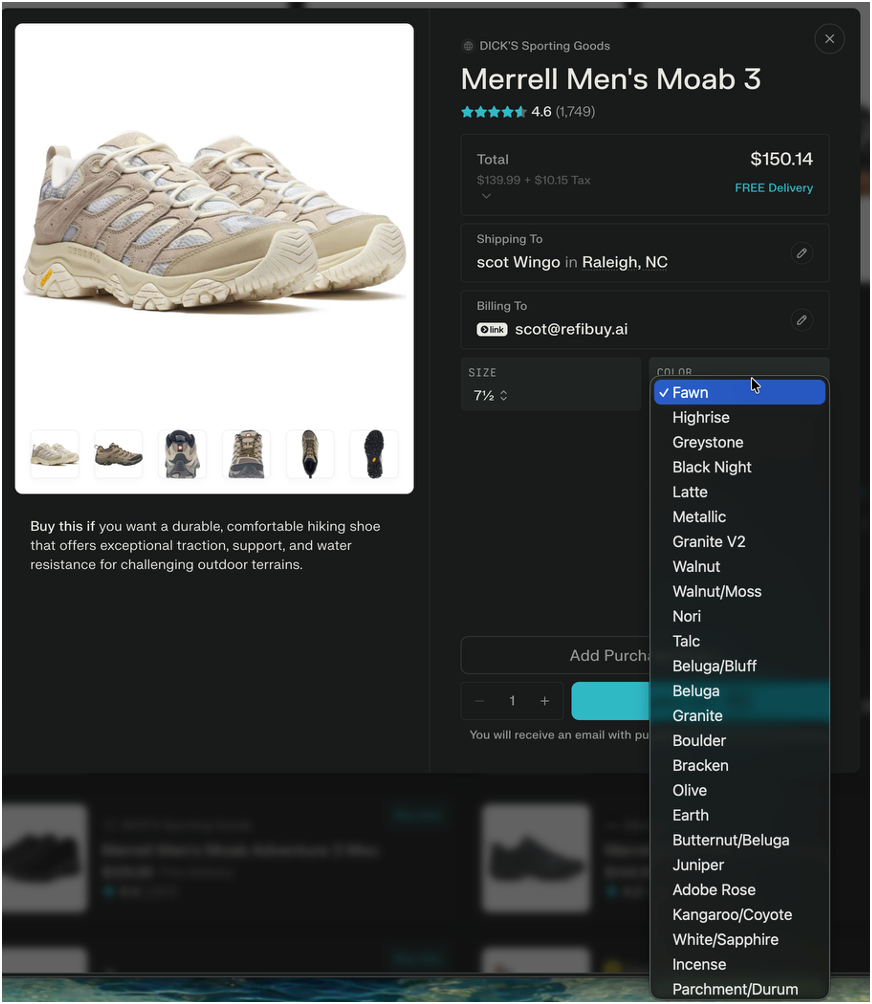

After you look at a standard PDP, the next big chunk of data is typically the variations, sometimes called parent/child relationships (ChatGPT calls them Variants in its feed spec). 2-dimension (e.g. size and color) variations get complicated, and the more dimensions you add, the more complicated this gets. Not only does the data get complicated, but so does the…..wait for it…. canonicalization. If you have a bunch of products canonicalized, now you need to sort through the variations. One solution is to take a superset - you just keep adding a color when you see a new one. If you see a color you already have, you ‘fold in’ the sizes of that color as separate offers at that variation level and ‘fill out’ the variations. This is what Perplexity does. Look at all the colors - what do you notice?

First, note the order of the variations. They aren’t alphabetical. My guess is they are ‘FCFS/FIFO’ (first-come-first-served or first in, first out) what Perplexity saw first was Fawn, then Highrise, so-on and so-forth.

Look at Walnut and Walnut/Moss, or Beluga/Bluff, Beluga and Butternut/Beluga. What about Granite and Granite V2 - should those be collapsed?
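To make the superset idea concrete, here is a toy Python sketch of a first-seen ‘fold in’, plus a helper that flags color names that share a word and might be duplicates. This is my guess at the behavior described above, not Perplexity’s actual algorithm, and the merchants and offers are invented.

```python
import re


def fold_in(offers):
    """Build {color: {size: [merchants]}} in first-seen order.

    offers is an iterable of (merchant, color, size) tuples.
    """
    catalog = {}
    for merchant, color, size in offers:
        sizes = catalog.setdefault(color, {})  # new color: append to the end
        sizes.setdefault(size, []).append(merchant)  # known color: fold in
    return catalog


def overlapping_colors(colors):
    """Pairs of color names that share a word, e.g. 'Walnut' and 'Walnut/Moss'."""

    def words(name):
        return set(re.split(r"[\s/]+", name.lower())) - {""}

    names = list(colors)
    pairs = []
    for i, first in enumerate(names):
        for second in names[i + 1:]:
            if words(first) & words(second):
                pairs.append((first, second))
    return pairs


SAMPLE_OFFERS = [
    ("Store A", "Fawn", "M"),
    ("Store A", "Highrise", "M"),
    ("Store B", "Fawn", "L"),
    ("Store B", "Walnut", "M"),
    ("Store C", "Walnut/Moss", "M"),
]

if __name__ == "__main__":
    catalog = fold_in(SAMPLE_OFFERS)
    print(list(catalog))
    print(overlapping_colors(catalog))
```

The color order in the output is simply the order the offers arrived in, which would explain a non-alphabetical list, and the overlap check surfaces pairs like Walnut and Walnut/Moss for a human to decide on.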

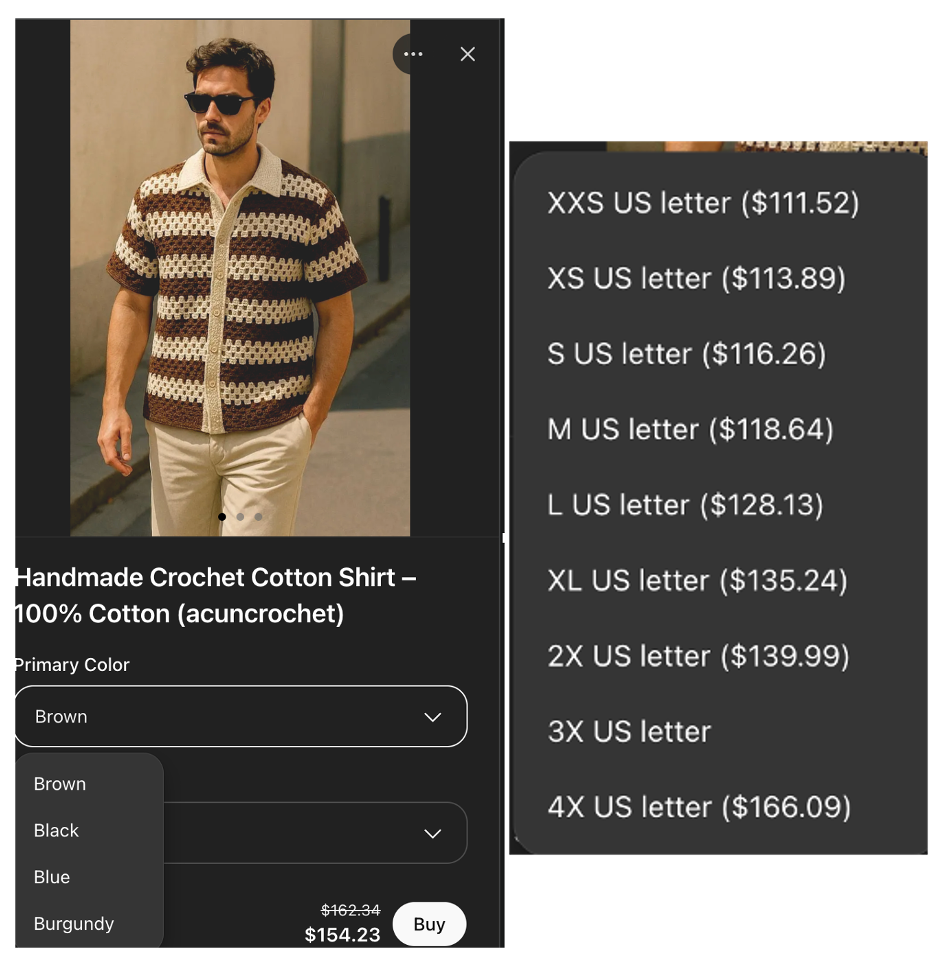

Let’s look at what ChatGPT does. You really only need variations when you get closer to a buy solution, so we need to look at Etsy inventory that has variations. At the time of this writing only Etsy is live on ChatGPT Instant Checkout:

This handmade crochet shirt has three variations: color, size and price.

What’s interesting is that under ‘size’ it literally repeats ‘US letter’, which is probably the datafeed element “size_type” or “size system”, which the product card should use to know to just show XS/S. This is a good reminder that all of this is < 30 days old and changing in real time.

Anyway, because Etsy doesn’t canonicalize products (one listing is one product card), ChatGPT hasn’t had to canonicalize these, but they can cause problems.

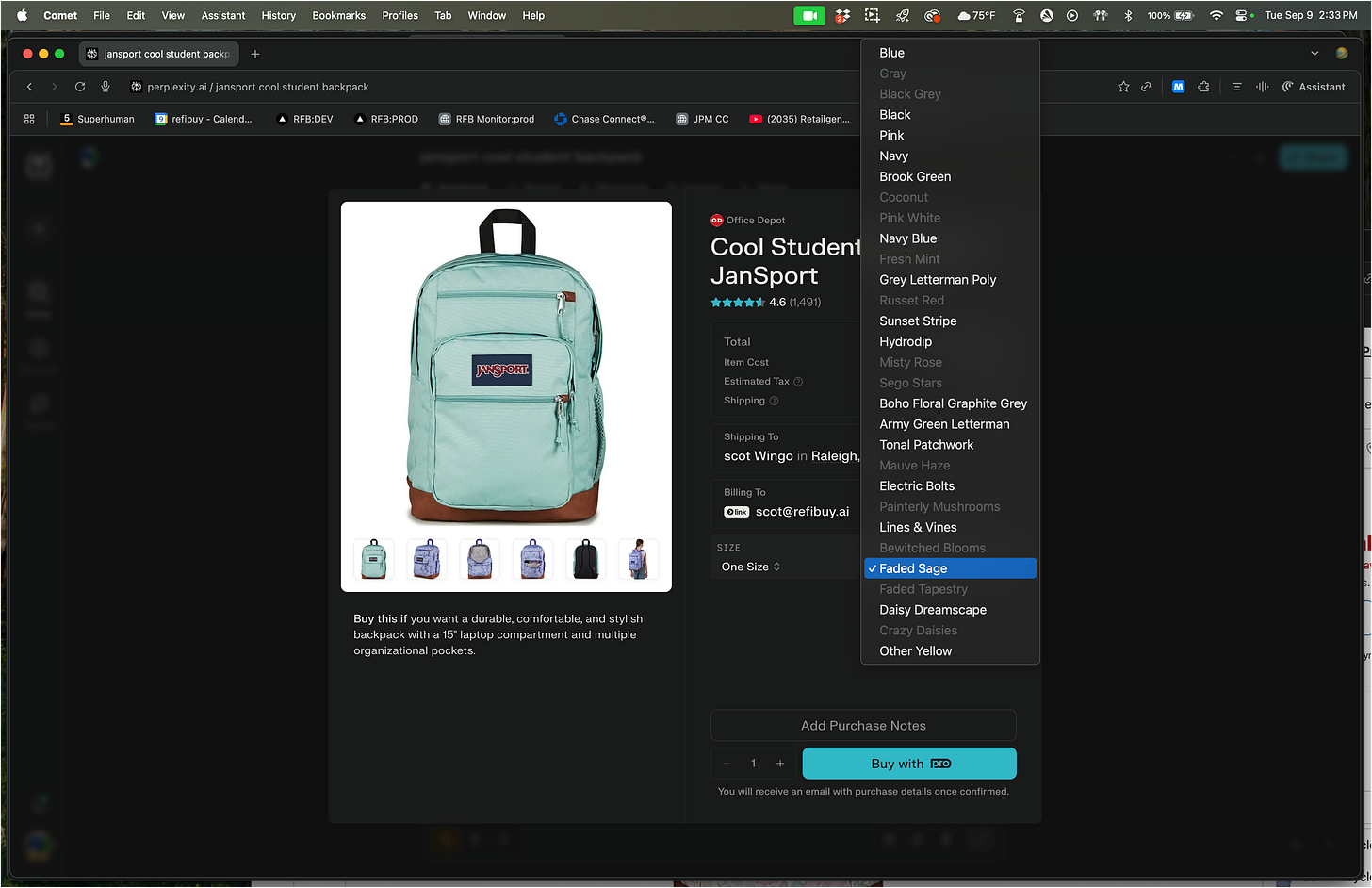

Back in Part 2, pitfalls, remember this lavender example where it’s not showing up, but Office Depot definitely has the lavender.

Tactic 4A: Not Showing On a Product Card? Next check your variations

Once you’ve worked through Steps 1-3, if your product still isn’t showing up on a product card, the next possible culprit is a variation problem. One idea that works with other systems is to look for a variation that is already on the product card and make sure your variation matches what’s there. Pay particular attention to spaces and slashes, and copy the existing card variation exactly.
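Here is a tiny Python sketch for that check: it compares your variation value against the values already on the card and, if there is no exact match, suggests the closest one to copy. It uses the standard library’s `difflib`; the 0.6 similarity cutoff and the sample values are arbitrary choices of mine.

```python
import difflib


def check_variation(my_value, card_values):
    """Report whether my_value matches a card variation exactly, else the nearest one."""
    if my_value in card_values:
        return {"exact": True, "closest": my_value}
    closest = difflib.get_close_matches(my_value, card_values, n=1, cutoff=0.6)
    return {"exact": False, "closest": closest[0] if closest else None}


if __name__ == "__main__":
    card_colors = ["Fawn", "Highrise", "Walnut/Moss"]  # values seen on the card
    print(check_variation("Walnut / Moss", card_colors))
    print(check_variation("Fawn", card_colors))
```

In the first call the spaces around the slash are the only difference, so the function points you at “Walnut/Moss” as the value to copy character for character.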

Tactic 4B: Javascript

Some merchants use a platform or ecommerce site technology that utilizes javascript. As mentioned in crawlability step 2, the answer engines’ web crawlers do not render javascript, so they will not pull in your variations. The schema.org metadata has a mechanism for this situation that provides the crawler your variations in metadata. Details here.
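One schema.org mechanism that fits this description is the `ProductGroup` type with `hasVariant` and `variesBy`; whether that is exactly what the linked details cover is my assumption. Here is a hedged Python sketch that builds that JSON-LD from a list of variants. The shirt, SKUs and prices are placeholders.

```python
import json


def product_group_jsonld(group_id, name, varies_by, variants):
    """Return schema.org ProductGroup JSON-LD text listing each variant as a Product."""
    data = {
        "@context": "https://schema.org",
        "@type": "ProductGroup",
        "productGroupID": group_id,
        "name": name,
        # variesBy names the properties that differ between variants.
        "variesBy": [f"https://schema.org/{prop}" for prop in varies_by],
        "hasVariant": [
            {
                "@type": "Product",
                "sku": v["sku"],
                "name": f'{name} - {v["color"]} / {v["size"]}',
                "color": v["color"],
                "size": v["size"],
                "offers": {
                    "@type": "Offer",
                    "price": v["price"],
                    "priceCurrency": v["currency"],
                },
            }
            for v in variants
        ],
    }
    return json.dumps(data, indent=2)


# Placeholder variants for a two-dimension (color x size) product.
VARIANTS = [
    {"sku": "SHIRT-LAV-S", "color": "Lavender", "size": "S", "price": "40.00", "currency": "USD"},
    {"sku": "SHIRT-LAV-M", "color": "Lavender", "size": "M", "price": "40.00", "currency": "USD"},
    {"sku": "SHIRT-FAWN-S", "color": "Fawn", "size": "S", "price": "42.00", "currency": "USD"},
]

if __name__ == "__main__":
    print(product_group_jsonld("SHIRT-1", "Example Crochet Shirt", ["color", "size"], VARIANTS))
```

Wrap the output in a `<script type="application/ld+json">` tag on the PDP so the variants are present in the page source even when the variation picker itself is javascript.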

Wrapping Step 4 and Pitfall Update

The good news - once you get through Step 4, we can stop worrying about canonicalization.

At this point with variations we’ve crossed 5 Pitfalls off the list:

Getting your variations correct on all SKUs will be the most finicky and hard-to-solve problem you face in this process. Start with your easiest products (single variation) and work up to the more complex double and triple variation scenarios. If you get stuck, post a comment here and either I or the community will give you some ideas on what to try next. 💪 you’ve got this! 🚀

Basic Attributes - Step 5

Once you’re through Step 4, everything should be canonicalized, you are at least ‘showing up’ in product cards and your variations are clicking. Now it’s time to give the answer engines more content and context. This is where your old way of doing attributes is gone as of October 1, 2025 and you need to reset.

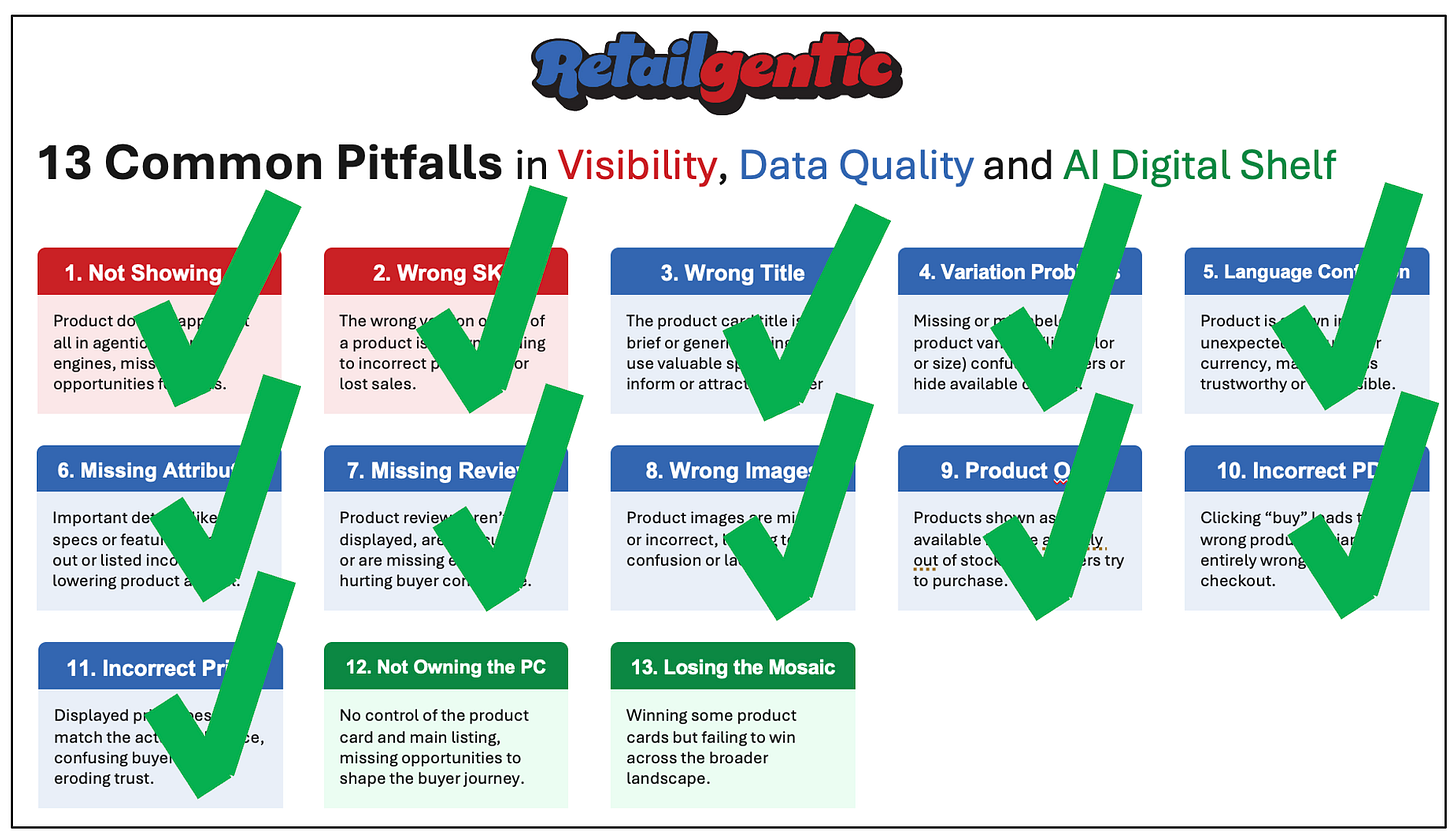

To see an example, let’s go back to Pitfall 6→

If you recall, we talked about how robust the category data is for this bike, especially on the Mike’s Bikes and REI product cards above. Let’s dig into this by looking at the REI PDP here.

It’s a very large PDP, so I don’t want to put it all here, but this is a taste of the level of content and context provided for this bike:

The check marks you see on Perplexity don’t come from this, they come from reviews, but the PDP content enables Perplexity to tie this all together. For example, maybe they’ve found a review about the importance/quality of a frame made of an alloy. You can imagine the user typing almost anything into the prompt, and Perplexity is going to be able to look up this content and know the answer: “What type of tires does it have?”, “What type of ride does it deliver?”, “Is it good for technical trails or street biking?”, “Does it include pedals?”

This is the North Star of PDPs. If you don’t want this much text on your PDPs, that’s understandable. You can have three ‘versions’ of your PDP:

Human PDP - this is the visible HTML on your website - image heavy, text light

GenAI Crawler PDP - this is the visible HTML minus javascript plus metadata. Most SEO strategies underutilize the metadata capabilities. This is your place to put a ton of content that doesn’t visually jam up your PDP, but the crawlers are designed to look for it there and in that format as they are all following schema.org conventions.

Feed PDP - If there’s something you can’t figure out how to get into the metadata, or data around inventory and pricing you want to update more frequently, it should go in the feed for the engine where available.

Expanded Attributes - Step 6

Once you’ve built out your basic attributes to provide more content and context, like the bike in the REI example, you want to put a lot more content in there. Try to think beyond specs and into features/benefits, use cases you know about for your product, and consumer short-hand.

What types of events does your item work at? What does it not work at?

In what scenarios would the consumer reach for your product? What does it pair with? Mining positive review data to pick up social cues can be a helpful source of content here. There are no ‘standards’ with expanded attributes; you can make up as many as you want and be as creative as you want.

Color palette

Scent profile

Pairs with

Frequently purchased with

Feature/benefit example: Has 21” wheels, which makes it great at getting over rough vegetation

Content like this.
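Since expanded attributes are free-form, one place they can live in metadata is schema.org’s `additionalProperty`, which takes a list of name/value pairs. Here is a small Python sketch of that; using `additionalProperty` for this purpose is my suggestion rather than an engine requirement, and the attribute values are invented.

```python
import json


def expanded_attributes(attrs):
    """Turn {name: value} into a list of schema.org PropertyValue objects."""
    return [
        {"@type": "PropertyValue", "name": name, "value": value}
        for name, value in attrs.items()
    ]


def product_with_attributes(name, attrs):
    """Return Product JSON-LD text carrying the expanded attributes."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "additionalProperty": expanded_attributes(attrs),
    }
    return json.dumps(data, indent=2)


# Invented attribute values using the attribute names from the list above.
ATTRIBUTES = {
    "Color palette": "Warm neutrals",
    "Scent profile": "Citrus and cedar",
    "Pairs with": "Matching hand cream",
    "Frequently purchased with": "Travel pouch",
}

if __name__ == "__main__":
    print(product_with_attributes("Example Body Lotion", ATTRIBUTES))
```

You can add as many pairs as you like without touching the visible PDP, which fits the “no standards, be creative” nature of this step.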

Extra Product Content and Other Product Content Catchall - Step 7

If you’ve looked at the ChatGPT feed spec they have ‘recommended’ content around reviews and Q+A. For reviews most retailers and brands have great review content. This typically is going to be more on-point, current, verified and positive to the product than random reddit reviews pulled in by the engines, so we strongly recommend providing it. If your existing reviews use javascript, and most do, they are most likely not making it into the crawl. You can solve that with metadata and also including it in the datafeed here. Metadata is more in your control and speedy right now, so we recommend starting there.
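As a starting point for the metadata route, here is a minimal Python sketch that turns your own review records into schema.org `aggregateRating` and `review` markup. The property names are standard schema.org ones; the review records and the rounding to one decimal are my own choices for illustration.

```python
import json


def reviews_jsonld(product_name, reviews):
    """Return Product JSON-LD text with an aggregate rating and individual reviews.

    reviews is a non-empty list of dicts with 'author', 'rating' and 'body'.
    """
    if not reviews:
        raise ValueError("at least one review is required")
    ratings = [r["rating"] for r in reviews]
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": product_name,
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": round(sum(ratings) / len(ratings), 1),
            "reviewCount": len(reviews),
        },
        "review": [
            {
                "@type": "Review",
                "author": {"@type": "Person", "name": r["author"]},
                "reviewRating": {"@type": "Rating", "ratingValue": r["rating"]},
                "reviewBody": r["body"],
            }
            for r in reviews
        ],
    }
    return json.dumps(data, indent=2)


# Invented review records standing in for your review platform's export.
SAMPLE_REVIEWS = [
    {"author": "Sample Reviewer A", "rating": 5, "body": "Great on rough trails."},
    {"author": "Sample Reviewer B", "rating": 4, "body": "Comfortable, pedals not included."},
]

if __name__ == "__main__":
    print(reviews_jsonld("Example Mountain Bike", SAMPLE_REVIEWS))
```

The review text ends up in the page source as plain metadata, so it no longer depends on the javascript review widget being rendered.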

Multilingual / Global Sites

If you are global, the engines are not sophisticated enough to understand multi-language sites if there’s a clever user-interface mechanism (e.g. flags at the top of the page or an IP location detector). The crawlers are frequently thrown off by these mechanisms, vs. switching based on domain names (e.g. store.com is English, store.co.uk is UK, store.de is German and so on). Fortunately, schema.org has a mechanism for this, detailed here.

Price/stock Issues

If your prices are not showing up correctly, keep in mind that while today’s web crawlers are 10x better than previous generations because they themselves are powered by LLMs, they can still sometimes miss strike-through pricing and will definitely miss ‘add to cart’ pricing or ‘add this Dyson product, get a $100 gift card’ type promotions. You can specify some of this in the metadata and even more in the feeds. As for stock, again, you need a clear text indicator that a product is out of stock, not an image and not javascript. Greying out the buy button most likely won’t be sufficient. You can also put this data in the metadata, which is recommended.
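To see roughly what a non-rendering crawler can learn about price and stock, here is a Python sketch that pulls the JSON-LD out of raw HTML and reports the offer’s price and availability. It is a simplification (it only looks at a top-level `Product` block and its first offer), and the sample page is invented.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collect parsed JSON-LD blocks from <script type="application/ld+json"> tags."""

    def __init__(self):
        super().__init__()
        self._in_jsonld = False
        self._buffer = []
        self.blocks = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_jsonld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self._in_jsonld = False
            try:
                self.blocks.append(json.loads("".join(self._buffer)))
            except json.JSONDecodeError:
                pass  # malformed block: skip it


def visible_offer(html):
    """Return price/availability from the first Product block, or None."""
    parser = JsonLdExtractor()
    parser.feed(html)
    for block in parser.blocks:
        if isinstance(block, dict) and block.get("@type") == "Product":
            offer = block.get("offers") or {}
            if isinstance(offer, list):
                offer = offer[0] if offer else {}
            return {"price": offer.get("price"), "availability": offer.get("availability")}
    return None


SAMPLE_PAGE = """<html><head>
<script type="application/ld+json">
{"@context": "https://schema.org", "@type": "Product", "name": "Example Paddle",
 "offers": {"@type": "Offer", "price": "99.00", "priceCurrency": "USD",
            "availability": "https://schema.org/OutOfStock"}}
</script></head>
<body><button class="buy greyed-out">Add to cart</button></body></html>"""

if __name__ == "__main__":
    print(visible_offer(SAMPLE_PAGE))
```

In the sample, the greyed-out button carries no machine-readable signal at all, while the metadata states the price and the `OutOfStock` status in plain text.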

Wrong PDP

If, like in pitfall 10, the wrong product is being linked, that is unusual for a crawler and most likely indicates a quality problem with your feed, or that the engine has picked up a feed that has a bad field or is old and causing problems (there is a lot of cross contamination of Google Shopping feeds). Start at the feed level and, if that doesn’t solve it, look at your metadata.

11/13, 2 to go!

That brings us to checking off 11 of our 13 pitfalls and we have our last two, the most strategic, to go! You can’t get to the fun strategic stuff if you don’t execute on these first 7 steps of the playbook and get your products all beefed up, full of content and context and happily canonicalized with tidy variations.

Product Card Optimization

Once your ‘offers’ are on the product cards, you then have a process of optimizing to ‘work your way’ up on the product card. The algorithm for each of the Agentic Commerce Engines is different and constantly changing. The best advice I can give is that, all things being equal, compare your PDP/metadata to the competitor’s PDP, try to objectively see where it is outperforming yours, and keep adding content and improving your context.

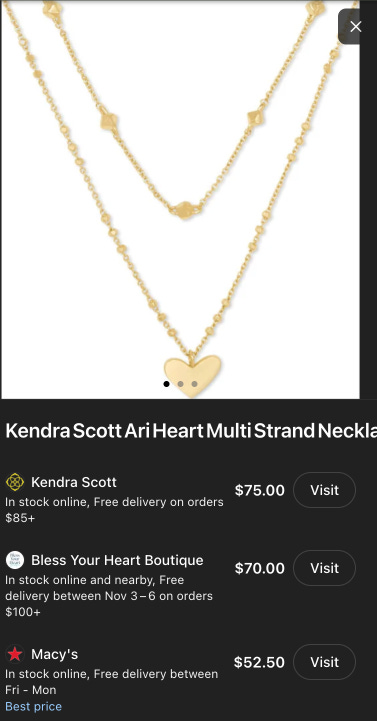

Here’s an example:

Here you see Kendra Scott (the brand) owns the product card at $75 with the highest price, then Bless Your Heart at $70, and Macy’s with the lowest price at $52.50, but showing up third. Note the ‘Best price’ designation on the product card. Also note the shipping/delivery piece:

Kendra Scott - “In stock online. Free delivery on orders $85+”

Bless Your Heart - “In stock online and nearby, Free delivery between Nov 3-6 on orders $100+”

Macy’s - “In stock online, Free delivery between Fri-Mon”

If you go look at the Macy’s PDP, the Bless Your Heart PDP and the Kendra Scott PDP, some observations:

UGC: Kendra has ~400 reviews, Bless Your Heart: 0, Macy’s: 1. Kendra has a FAQ; it’s not clear if it’s picked up as it looks like javascript - we’d have to inspect the metadata to dig deeper.

Basic Attributes: Kendra has the most robust attributes

Extra Attributes: Kendra wins here - for example, they have a very detailed “Jewelry Care” section that details the metallurgy and care

Other: Macy’s has a ‘low stock’ flag on the product

Either the union of all those areas where Kendra is ‘winning’ or one in particular has caused the ChatGPT algorithm to pick Kendra over Bless Your Heart and Macy’s. If you find yourself in a situation where you aren’t winning the product card like this example, my advice is to go back through steps 3-7 to improve your chances. Remember the foundation - content and context are king.

Product Card Monitoring - Step 8

Once you’ve gone through all the work of canonicalizing your cards to make sure they show up, and optimizing to ‘own the product cards’, you aren’t done. The engines are launching new major models annually and major updates at least once in between, so you have a model update 2-4X/yr. If you are on 2 engines, that’s 4-8 updates/yr to navigate. I think by Holiday ‘26 you’ll want to be on: ChatGPT, Perplexity, Gemini, Copilot, Meta, Grok and Claude. That’s 7 engines, or 14-28 changes/yr. On top of THAT, depending on your category, your catalog is changing too. If you are in fashion/apparel you typically have a huge portion of your catalog changing 3-5 times a year. Even non-fashion retailers have 5-10% catalog movement a year as products go in and out of stock, new products are added and old products are deprecated. On top of THAT, the competition on the product card isn’t static; they are always working to improve their content and bump you out of the top slot.

As all three sides of the equation (your product catalog, agentic commerce engine algorithms, competition) move around, you need to constantly be watching the product cards to make sure you maintain your leadership position and take action to stay on top. It’s up to each organization to measure this priority against all the others as well as resource availability. Once ChatGPT Checkout is live and other engines follow suit, there will be closed-loop ‘sales rank’ data that can be one way to prioritize what to monitor and how often.
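However you collect the data (by hand or with a tool), the monitoring itself boils down to comparing snapshots. Here is a bare-bones Python sketch of that comparison. The snapshot format (SKU mapped to your position on the card, with `None` meaning you are not on the card) and the SKUs are hypothetical.

```python
def card_changes(previous, current):
    """Compare two snapshots of {sku: rank or None} and list what got worse.

    Rank 1 means you own the product card; None means you are not on it.
    """
    alerts = []
    for sku, old_rank in previous.items():
        if old_rank is None:
            continue  # was already off the card, nothing to lose
        new_rank = current.get(sku)
        if new_rank is None:
            alerts.append((sku, "dropped off the card"))
        elif new_rank > old_rank:
            alerts.append((sku, f"slipped from #{old_rank} to #{new_rank}"))
    return alerts


if __name__ == "__main__":
    last_week = {"SKU-1": 1, "SKU-2": 2, "SKU-3": 1}
    this_week = {"SKU-1": 3, "SKU-2": 2, "SKU-3": None}
    for sku, message in card_changes(last_week, this_week):
        print(f"{sku}: {message}")
```

Run it per engine after each snapshot and work the alerts list first; once sales-rank data is available, you could sort the alerts by it to decide what to fix first.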

Digital AI Shelf Optimization - Step 9

In traditional e-commerce, brands frequently utilize digital shelf companies that help understand the retail ‘footprint’ of competing brands. The classic example is Pampers vs. Huggies. These companies will scan (crawl) all the top retailers for common search terms, look at how the hero brand is showing up against the challenger brand and then using clues like sales rank to compare ranges of sales for each product and watch for any movement in market share over time.

There’s a real probability that GenAI Answer Engines continue their exponential consumer growth and become a significant portion of online and retail influence faster than everyone is anticipating. If that’s the case, these early investments will pay huge dividends.

If your organization buys into that, then starting to track not only your own position but also that of your top priority competitors can be helpful. Once you know your sales on a channel (via a checkout-type solution), you can make an educated guess at the competitor’s sales for any given card, add those up, and you get their ‘channel sales’ for each engine.

Admittedly this is a very distant 9th priority at this point in time, but given how fast this space is moving, I’m starting to change my perception of time and pull things in more aggressively. 5 years ago, I wouldn’t have mentioned a distant possibility. Today, I wouldn’t be surprised if this was something we cared about in less than a year. 🤷‍♂️

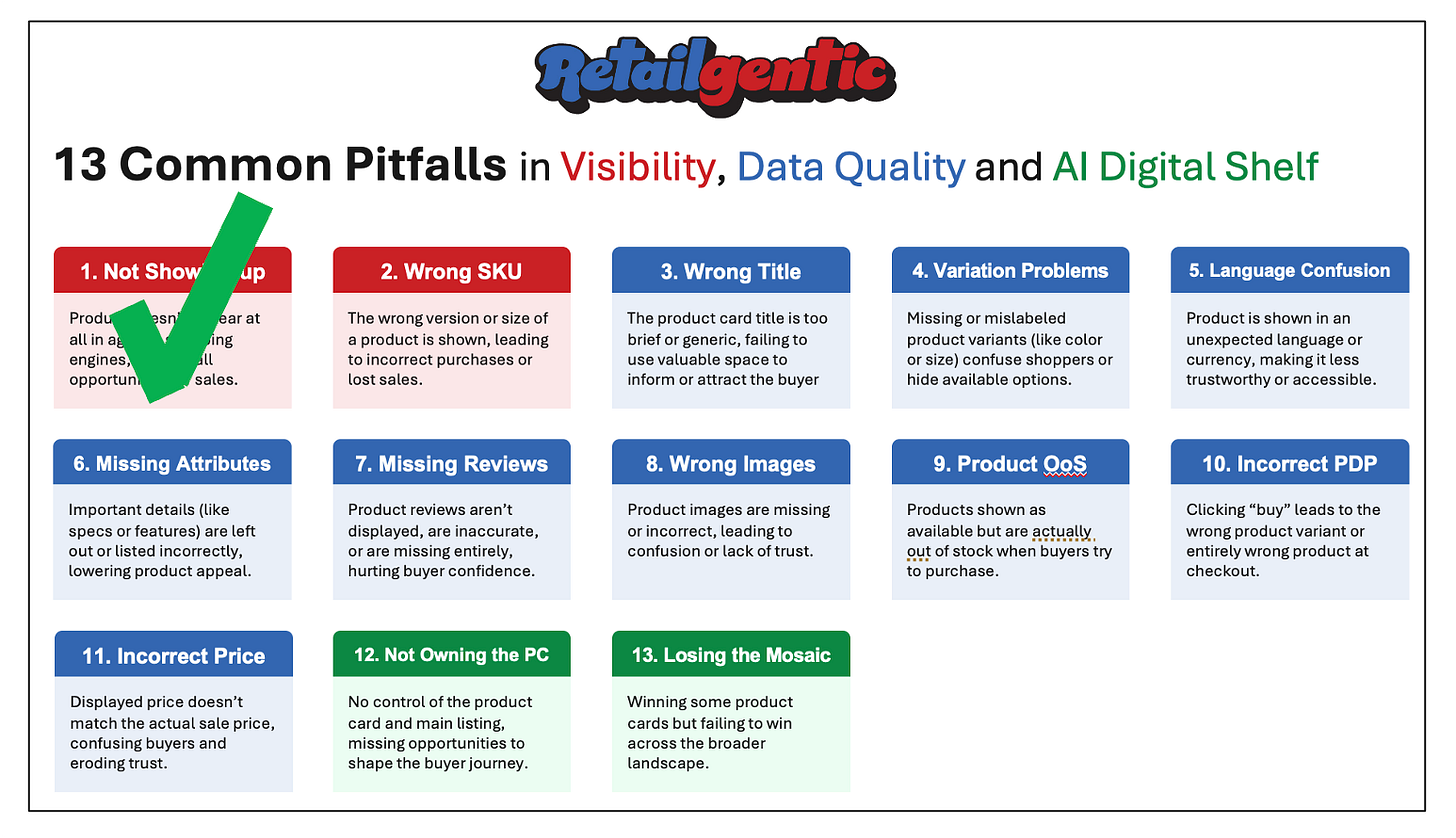

Before we wrap up this mega-series, one last check-in with our pitfall tracker:

Ah, #SoSatisfying to see all those ✅ 😎

Conclusion

Congratulations, you have completed our comprehensive 4-part series where we dove deep into Agentic Commerce Optimization. If there were such a thing as ACO certification, you’d be certified and ready to go! Let’s recap the journey:

In Part I, we introduced GEO→ACO and the goal, the ‘why’.

In Part II, we introduced the 13 common Agentic Commerce Pitfalls and showed real-world examples of each so you can identify them more easily for your products.

In Part IIIA, we covered four foundational concepts for ACO:

Introduced the 9-step sequential system

Reminded you of the importance of owning the product card

Walked through a backgrounder on canonicalization

Gave an overview of the importance of Content and Context

Finally, in Part IIIB, we introduced the 9-step ACO Sequential Playbook and took you through it in detail showing how you can apply it to fix the 13 pitfalls from Part II for your product catalog and your product cards.

At this point, you are ready to go out there, own those product cards and 🦵 some Agentic Commerce 🫏 for Holiday 2025! Happy ACO’ing!