The Agentic Era Begins: How Reinforcement Learning and GenAI Will Redefine Retail Software

Two predictions on the future of retail enablement software. Plus three Essential AI Concepts Every Commerce Leader Should Understand

Ecommerce Software Backgrounder

As an early builder of an EcommTech/RetailTech/Ecommerce Enablement provider at the beginning of the SaaS era, I’ve seen hundreds if not thousands of software vendors born in that period. As an industry, the software providers that support retail have been in a bit of a rut for the last 5-10 years. That’s all about to change in a dramatic way.

Traditional SaaS Era (2001-Today)

The first era on the ecommerce software timeline is traditional SaaS, with vendors like ChannelAdvisor. The vendor comes up with an initial solution and then endlessly adds functionality to keep up with the fast-moving pace of digital commerce. SaaS solutions are hosted rather than on-premise and, typically, the revenue model is based on seats, sometimes combined with a fee tied to ad revenue or sales. This ‘era’ began with Omniture and ChannelAdvisor in 2001/2002 and is still going strong ~25 years later.

MACH (2020-Today)

In 2020 the MACH alliance came on the scene to promote:

Microservices based

API first

Cloud-native SaaS

Headless architecture

This movement grew out of the realization that SaaS was getting bloated and some buyers didn’t want the ‘whole thing’: they wanted some of the Lego blocks inside the SaaS app, but not all of them. This ‘breaking apart’ of monolithic SaaS apps is commonly called composability, where the buyer can buy the building blocks they want without the pieces they don’t. Also implied is the idea that systems should work together. This is a reaction to traditional SaaS apps building walled-garden systems to try to limit churn.

Agentic Era (2025+)

I believe we are starting the Agentic Era for commerce enablement software. What I want to cover today is one important way it’s going to be very different from anything we’ve seen before, in a very positive and mind-blowing way that we’ll build up to.

When I say Agents, here and in every other context, I’m talking about the Agents that were introduced in Q4 of 2024. This means if a company that was founded in 2020 says it is Agentic, your Spidey-sense should tingle, because that’s highly unlikely. I call this Faux-gentic, but someone used ‘Agentic washing’ the other day, which is pretty good too. In any case, most likely they have some old-school ML technology that they have either rebranded or wrapped, more for marketing than for architecture reasons.

The authenticity of ‘Agentic-ness’ is going to really matter going forward and part of my goal here is to explain why by highlighting the benefits from Agentic and how it is

Introduction to Three Essential GenAI Concepts

Don’t worry, we’re not going to get into the weeds here, but there are three important concepts born out of the GPT/GenAI era that you need to understand to wrap your head around the key new benefits of the Agentic era of commerce enablement software. They are Training vs. Inference, Modern Agent Architecture, and Reinforcement Learning with AI Feedback (RLAIF).

Training vs. Inference Time

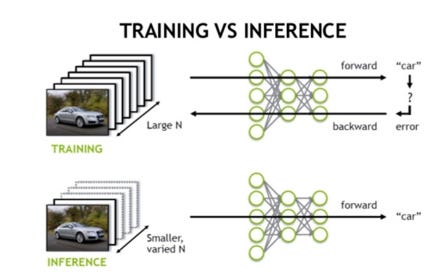

In the GenAI world, going back to classic ML and continuing into the GPT era, a system had a clear point in time where you trained it for a long time on a large data set (as illustrated above).

Then, once trained, you would enter ‘inference mode’, where you take the trained system and have it ‘infer’ (thus the inference phase) answers for new inputs based on what it learned in training. In this picture they are training an image recognition model on cars so that it can answer ‘is a car’/‘is not a car’ in the inference cycle.

Historically, the Training phase was dynamic (the system could ‘learn’ new things) and the Inference phase was static. For example, if the model got confused by a Cybertruck and said ‘not a car’, you couldn’t do anything about it at inference. You’d have to start a completely new training cycle and throw a bunch of Cybertrucks in there; only after that training would it have learned and fixed the error.
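To make the static-inference problem concrete, here is a minimal sketch in Python. It is not how a real image model works: the two features (roundness and a wheel score), the example values and the nearest-centroid classifier are all assumptions made up for illustration. The point is only that `infer` never changes the model, so the Cybertruck mistake can only be fixed by calling `train` again.

```python
from statistics import mean

# Hypothetical hand-made features: (roundness, wheel_score), both in 0..1.
BASE_EXAMPLES = [
    ((0.9, 1.0), "car"), ((0.8, 1.0), "car"), ((0.7, 1.0), "car"),
    ((0.1, 0.0), "not_car"),   # e.g. a refrigerator
    ((0.2, 1.0), "not_car"),   # e.g. a shopping cart
    ((0.0, 0.5), "not_car"),   # e.g. a hand truck
]
CYBERTRUCK = (0.1, 1.0)        # angular body, four wheels


def train(examples):
    """Training phase: learn one average point (centroid) per label."""
    by_label = {}
    for features, label in examples:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(mean(column) for column in zip(*rows))
        for label, rows in by_label.items()
    }


def infer(model, features):
    """Inference phase: read-only lookup of the nearest centroid."""
    def squared_distance(label):
        return sum((a - b) ** 2 for a, b in zip(features, model[label]))
    return min(model, key=squared_distance)


model_v1 = train(BASE_EXAMPLES)
print(infer(model_v1, CYBERTRUCK))   # prints not_car: the frozen model is wrong

# The only fix is a new training cycle with Cybertrucks added to the data.
model_v2 = train(BASE_EXAMPLES + [(CYBERTRUCK, "car")] * 3)
print(infer(model_v2, CYBERTRUCK))   # prints car
```

Notice that nothing you do between the two `train` calls can change the first answer. That is the limitation the rest of this post is about.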

Modern (Late 2024+) Agent Architecture

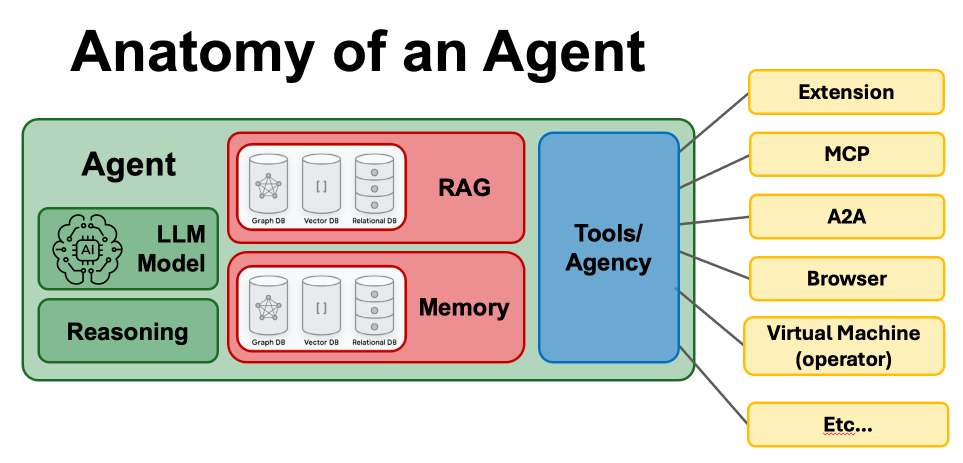

Modern agents (circa Q4 2024) have the architecture shown above. At a high level, they have:

LLM - Think of the LLM as the brain of the system

Reasoning - You’ve probably played with models like o3 that are able to pick apart problems, decompose them and tackle them in phases.

RAG - Retrieval Augmented Generation - think of this as a little database that has subject-matter-expertise. For our purposes, let’s say it knows all about retail and ecommerce. e.g. What’s a SKU, what’s a product catalog, what’s a variation, etc.

Memory - Memory allows the agent to learn at inference time. Put a pin in that for now 📌

Tools - By definition, Agents can ‘do stuff’ and the tools your agent has give it a range of actions it can take.
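If it helps to see the five pieces side by side, here is a rough Python sketch of how they might be wired together. Everything in it is an assumption for illustration: the `Agent` class, its field names, the keyword lookup standing in for RAG and the stub LLM are hypothetical, not any vendor’s actual design.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Agent:
    llm: Callable[[str], str]                 # the "brain": prompt in, text out
    knowledge: dict[str, str]                 # RAG stand-in: keyword -> domain fact
    tools: dict[str, Callable[[str], str]]    # named actions the agent can take
    memory: list[str] = field(default_factory=list)  # notes kept between tasks

    def retrieve(self, task: str) -> list[str]:
        """RAG stand-in: return facts whose keyword appears in the task text."""
        return [fact for key, fact in self.knowledge.items() if key in task.lower()]

    def run(self, task: str, tool_name: str) -> str:
        context = self.retrieve(task) + self.memory
        # Reasoning stand-in: ask the LLM for a plan given the task and context.
        plan = self.llm(f"Task: {task}\nContext: {context}")
        result = self.tools[tool_name](plan)          # act through a tool
        self.memory.append(f"{task} -> {result}")     # remember what happened
        return result


# Stub components so the sketch runs without any external service.
agent = Agent(
    llm=lambda prompt: "plan for: " + prompt.splitlines()[0],
    knowledge={"sku": "A SKU is a unique identifier for a sellable item."},
    tools={"list_product": lambda plan: "listed"},
)
print(agent.run("List SKU 123 on the marketplace", "list_product"))  # prints listed
print(agent.memory)
```

In a real agent the LLM call, the retrieval step and the tools would each be far richer, but the shape is the same: retrieve context, reason, act, remember.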

Reinforcement Learning Feedback (Human or AI)

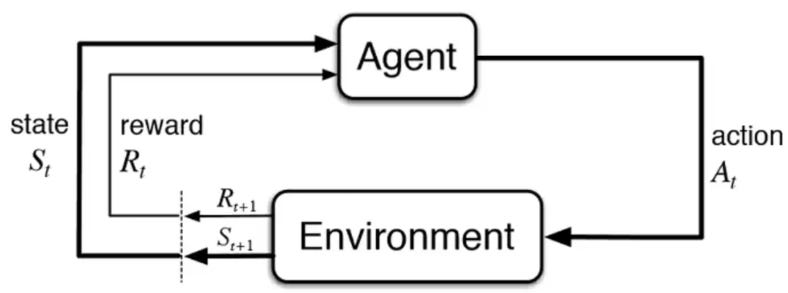

Back to memory in agents. Because Agents have memory, they can learn at inference time based on feedback. This is called Reinforcement Learning (at inference time), and it is illustrated above.

This happens much like a human learns in a trial-and-error model:

The agent takes an action and expects a change from that action

If the change is what it wanted, it is rewarded, or its mission is successful and it’s done.

If the change is NOT what it wanted, it tries something else until it hits the reward phase.
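The three steps above can be sketched as a small loop. This is a toy under stated assumptions: `run_episode`, the action names and `fake_marketplace` are all hypothetical, and real reinforcement learning is far more involved than a dictionary lookup. What it does show is the key behavior: the second time around, memory lets the agent skip the trial and error.

```python
def run_episode(goal, actions, environment, memory):
    """Try actions until one produces the goal; return (action, attempts)."""
    # If memory already holds an action that reached this goal, try it first.
    remembered = [memory[goal]] if goal in memory else []
    candidates = remembered + [a for a in actions if a not in remembered]
    for attempts, action in enumerate(candidates, start=1):
        outcome = environment(action)        # take the action, observe the change
        if outcome == goal:                  # reward: the wanted change happened
            memory[goal] = action            # store what worked for next time
            return action, attempts
    return None, len(candidates)             # nothing worked this time


# Hypothetical environment: only one fix actually gets the listing live.
def fake_marketplace(action):
    return "listing_live" if action == "add_missing_gtin" else "listing_rejected"


ACTIONS = ["resend_feed", "shorten_title", "add_missing_gtin"]
memory = {}
print(run_episode("listing_live", ACTIONS, fake_marketplace, memory))  # 3 attempts
print(run_episode("listing_live", ACTIONS, fake_marketplace, memory))  # 1 attempt
```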

There are two ways the feedback can work:

Human feedback - A human takes the output from the system, evaluates it and either gives it a 👍 or 👎

AI feedback - A different AI (in our example, a second ‘judge/evaluator’ agent) observes whether the original Agent’s action deserves a 👍 or 👎

In GenAI-land they call these:

RLHF - Reinforcement learning with human feedback

RLAIF - Reinforcement learning with AI feedback

Both of these can happen at Training time or Inference time - so sometimes you’ll see that qualifier in there such as: Reinforcement learning with AI Feedback at inference or Inference reinforcement learning with AI feedback.
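One way to picture the difference between RLHF and RLAIF is that the ‘judge’ is a pluggable part. In this hedged sketch the `Judge` type, both judge functions and the product titles are hypothetical, and the AI judge is a simple rule standing in for a second evaluator agent.

```python
from typing import Callable

Judge = Callable[[str], bool]   # True means thumbs up, False means thumbs down


def human_judge(recorded_ratings: dict[str, bool]) -> Judge:
    """Human feedback: look up a rating a person already gave (default: thumbs down)."""
    return lambda output: recorded_ratings.get(output, False)


def ai_judge(max_length: int, required_word: str) -> Judge:
    """AI feedback stand-in: a rule-based evaluator playing the second agent."""
    return lambda output: len(output) <= max_length and required_word in output


def first_approved(candidates: list[str], judge: Judge):
    """Return the first candidate the judge approves, or None."""
    return next((c for c in candidates if judge(c)), None)


titles = ["Blue Widget", "Acme Blue Widget, 2-Pack", "Acme " + "Widget " * 40]
print(first_approved(titles, human_judge({"Acme Blue Widget, 2-Pack": True})))
print(first_approved(titles, ai_judge(max_length=80, required_word="Acme")))
```

Both calls pick the same title here, but only the second one runs without a person in the loop.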

Putting Them All Together: Inference Time Learning!

Now if you swirl together these concepts of agents and RLAIF, you get a GenAI-powered system that can learn at inference time. It doesn’t have to go through the long and painful training cycle.

When can Agentic RLAIF at Inference time be most useful?

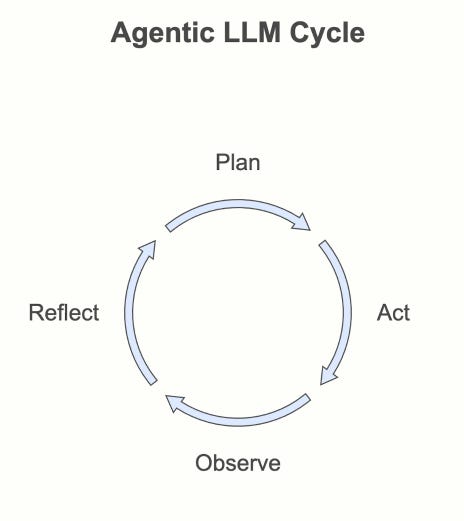

This system is ideally suited to closed-loop systems where the Agent, or team of Agents, can reason out a plan, take an action, observe what happened and tweak/realign the plan based on the observed outcome.

Because humans are slower and more expensive than computers, ideally you take humans out of the loop over time and move from a ‘human reinforcement’ system to a ‘human observer’ one.

Examples of problems where RLAIF excels…

If you think about areas of our world that have closed loops, here are some examples:

Games - Games have zero-sum outcomes (one winner, one loser). DeepMind’s AlphaGo, which beat Lee Sedol in 2016, learned through reinforcement learning and self-play, an early relative of the techniques mentioned here.

Robotics - A robot hand has to separate apples and bananas, and a vision system can be set up as the AI feedback. The real world is full of ‘easily verifiable outputs from actions’.

Code generation - One of the reasons companies like Cursor, Windsurf and Replit are growing rapidly is that their solutions not only work, but coding has a closed-loop element to it. The agent writes code and it either compiles/runs or it doesn’t; that’s the first closed-loop feedback. Once it runs, does it do what it is supposed to? That’s the second closed-loop feedback.
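The two feedback loops in code generation are easy to sketch. The snippet below is an illustration under assumptions, not how any of those products work: `check_candidate` and the candidate strings are hypothetical, and a real system would run generated code in a sandbox rather than call `exec` directly.

```python
def check_candidate(source, func_name, cases):
    """Return 'does_not_run', 'wrong_output' or 'passes' for a generated snippet."""
    namespace = {}
    try:
        # Loop 1: does the code compile and run at all?
        exec(compile(source, "<candidate>", "exec"), namespace)
    except Exception:
        return "does_not_run"
    func = namespace.get(func_name)
    if not callable(func):
        return "does_not_run"
    try:
        # Loop 2: does it do what it is supposed to do?
        ok = all(func(*args) == expected for args, expected in cases)
    except Exception:
        return "wrong_output"
    return "passes" if ok else "wrong_output"


# Three hypothetical attempts an agent might produce for "write add(a, b)".
CANDIDATES = [
    "def add(a, b) return a + b",            # syntax error
    "def add(a, b):\n    return a - b",      # runs, wrong answer
    "def add(a, b):\n    return a + b",      # runs, right answer
]
CASES = [((1, 2), 3), ((0, 0), 0)]

for source in CANDIDATES:
    print(check_candidate(source, "add", CASES))
# prints does_not_run, then wrong_output, then passes
```

Each result is a clear, machine-checkable signal the agent can use to decide what to try next, with no human needed to grade it.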

There is another area that, if you’re reading this post, YOU are very familiar with, where you see closed loops and zero-sum-game situations a lot.

Yep, you guessed it, retail.

Putting it All Together: RLAIF + Agentic + Retail

The world of retail and ecommerce is chock full of scenarios where the combination of RLAIF and Agentic AI can solve some of our hardest problems. Here are some examples:

Advertising - Every ad network revolves around measurable goals such as impressions, conversions, budget and ROAS. Agentic models with RLAIF are very good at optimizing toward goals like these.

Marketplace Listings - If you are trying to list something on a marketplace like Amazon and want to make sure it’s listed and optimized, that’s a closed-loop process.

Marketplace optimizations - If your strategy is to own the Amazon Buy Box for 100 SKUs, that’s a closed loop process.

In fact, our world is so full of them I could go on much much longer, but you get the idea.
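Taking the Buy Box example above, here is a deliberately oversimplified sketch of the closed loop. The big assumption is the rule that the lowest price wins; real marketplaces weigh more than price, and the function name, prices and step size are all made up for illustration. Amounts are in cents to avoid floating-point surprises.

```python
def chase_buy_box(price, floor, competitor, step=50, max_rounds=20):
    """All amounts in cents. Returns (winning_price_or_None, history)."""
    history = []
    for _ in range(max_rounds):
        won = price < competitor            # observe the simulated outcome
        history.append((price, won))
        if won:
            return price, history           # goal reached, the loop closes
        if price - step < floor:
            return None, history            # strategy limit hit, stop lowering
        price -= step                       # adjust and try again
    return None, history


# The floor price is the customer's strategy; the agent only handles tactics.
print(chase_buy_box(price=2599, floor=2300, competitor=2450))
print(chase_buy_box(price=2599, floor=2480, competitor=2450))
```

In the first call the agent wins at 2449 cents after four observations. In the second, the floor stops it before it can win, which is exactly the kind of outcome it should report back rather than work around.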

Prediction 1….

All of this setup is so I can share the first of two predictions:

Prediction 1: The Venture Capital frenzy around Agentic coding companies will work its way to Agentic Retail companies in 2026. Then we’re going to see a Cambrian explosion of new software companies that are ‘Agentic native’.

The Mind Stretching Dawn of the Agentic Era in Commerce Enablement Software

As an industry, most retailers, brands and agencies haven’t experienced these systems that learn on their own using RLAIF. In traditional SaaS and MACH you can get flavors of this. Bidding systems have been around since the 90s, but comparatively those are stone-age hammers and the next generation is going to be precision robot lasers.

Here’s an example: imagine you are using agentic marketplace software. It automatically detects a change in the Amazon API and adapts to it. It then makes sure all your products are showing up correctly, and if they aren’t, it learns how to fix it. You check in on it and it gives you an update on all the changes it detected and fixed and the tweaks it made to implement your strategy, and then recommends some new strategies based on moves it has seen from your competitors. It realizes you are low on some key SKUs and has pre-prepared a re-order from the vendor. It has also recommended 30 new products you should think about listing on Amazon.

And finally….

The real 🤯 is that the agents are all basically clones: they have the same memory. The changes to the Amazon API, etc. are now ‘known’ by the agent not only for Customer #1, but also for Customers #2 through N. The whole system learns and gets smarter. To be clear, they are NOT sharing customer data in any way, but they are sharing learnings. This would be like colleagues getting together to share some best practices. The sharing will largely be at a tactical level, with the customers setting strategies (which, like any other data, are NOT shared).
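Here is one way the ‘shared learnings, private data’ split could look in code. This is purely a sketch: the `TenantAgent` class, the tactic names and the example fix are hypothetical, and a real system would need far stronger isolation than two Python dictionaries.

```python
class TenantAgent:
    """One agent per customer: private data stays local, tactics are shared."""

    def __init__(self, customer: str, shared_tactics: dict[str, str]):
        self.customer = customer
        self.shared_tactics = shared_tactics   # same dict object for every agent
        self.private = {}                      # strategies and data, never shared

    def set_strategy(self, name: str, value: str) -> None:
        self.private[name] = value

    def learn_tactic(self, problem: str, fix: str) -> None:
        self.shared_tactics[problem] = fix

    def known_fix(self, problem: str):
        return self.shared_tactics.get(problem)


shared = {}
agent_1 = TenantAgent("customer_1", shared)
agent_2 = TenantAgent("customer_2", shared)

agent_1.set_strategy("buy_box_target", "top 100 SKUs")
agent_1.learn_tactic("title_field_renamed", "send title as item_name")

print(agent_2.known_fix("title_field_renamed"))   # learned by customer 1's agent
print(agent_2.private)                            # prints {}: no strategy leaked
```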

In fact, the more customers that work with the vendor, the more data, touch points and closed loops are created: basically an Agentic Network Effect. The more customers the company has, the more closed loops are learned and the better the solution becomes. Rinse and repeat.

This leads us to my second and final prediction:

Prediction 2: Agentic Native solutions will be 8-10x as productive as traditional SaaS and 3-5x as productive as MACH-oriented solutions.

Conclusion: The Agentic Age is Here

We are at the start of a huge shift in the software that helps brands and retailers manage their online businesses. How that software is built, bought and operated is going to change very rapidly. For over 20 years, we’ve lived through the evolution from traditional SaaS to MACH, with its added modularity and composability.

Now, Agentic software with inference-time learning, autonomous execution, and shared memory architecture is set to redefine the very nature of what software does for retail.

At first this shift will feel incremental, but because of Agentic’s ability to learn, it will quickly become clear that it’s exponential. Agentic-native platforms will not only automate but also optimize themselves in real-time, creating adaptive systems that learn across customers and improve continuously.

Perhaps the biggest change will come to the user experience/user interface, but that’s a topic for another day and another post.